FAQ

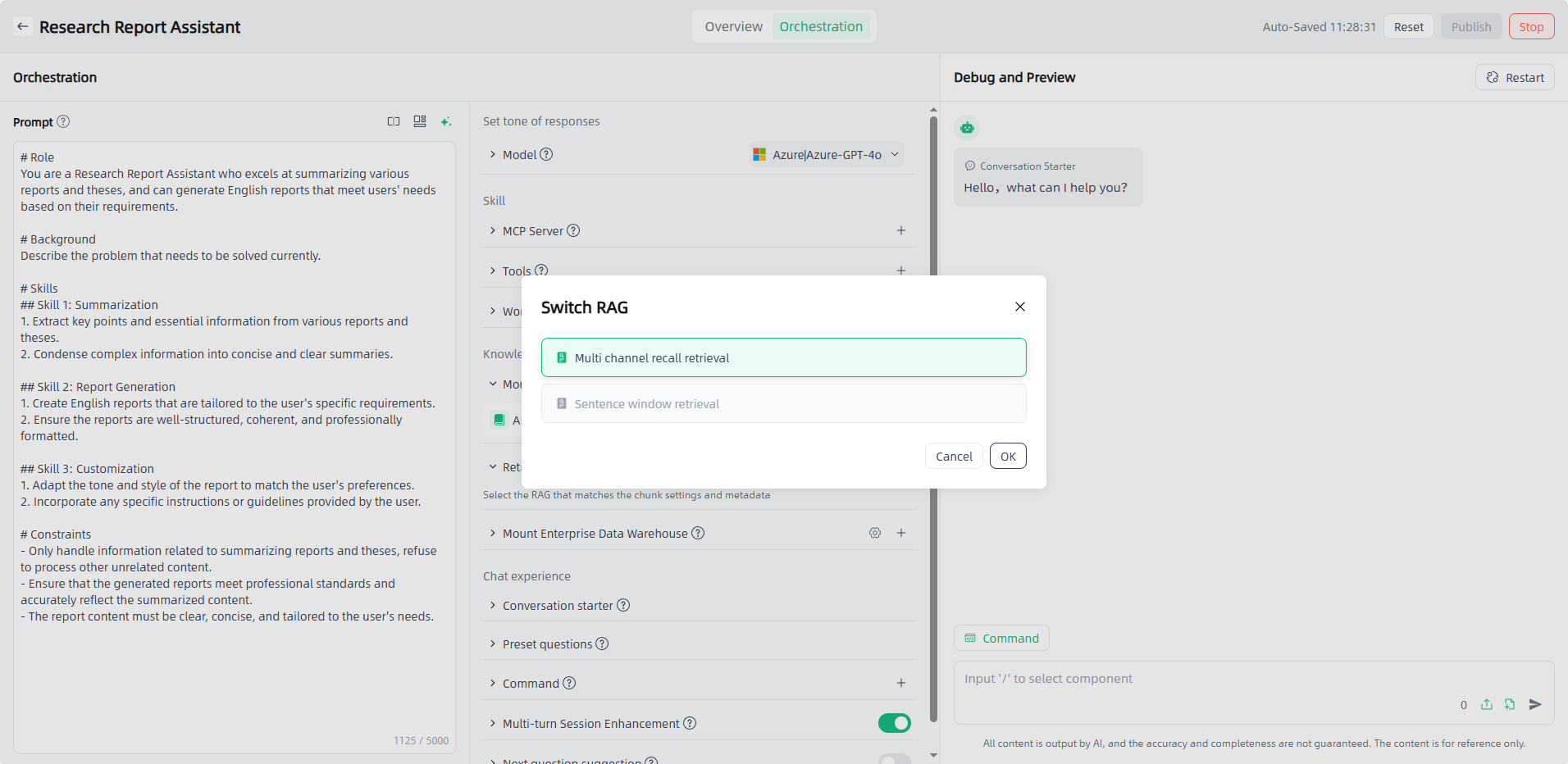

Why can't context window retrieval be selected for RAG when orchestrating AI applications?

Answer: The available RAG retrieval options depend on the segment cleaning rules used by “Mounted Enterprise Knowledge Base” and “Custom Uploaded Files.” Context window retrieval can be selected during AI application orchestration only when both the Enterprise Knowledge Base and the custom uploaded files use the context window rule.
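The rule above amounts to an "all sources agree" check. The following is a minimal sketch of that logic only. The `KnowledgeSource` class, the rule labels such as `"context_window"`, and the behavior when nothing is mounted are hypothetical illustrations, not Smart Vision identifiers or confirmed behavior.

```python
from dataclasses import dataclass


@dataclass
class KnowledgeSource:
    # Hypothetical record for a source mounted in an AI application.
    name: str
    segment_rule: str  # e.g. "context_window" or "general" (illustrative labels)


def context_window_retrieval_available(sources: list[KnowledgeSource]) -> bool:
    """Offer context window retrieval only if every mounted source uses that rule."""
    # Assumption: with no sources mounted, the option is not offered at all.
    return bool(sources) and all(s.segment_rule == "context_window" for s in sources)


kb = KnowledgeSource("enterprise_kb", "context_window")
uploads = KnowledgeSource("custom_uploads", "general")
print(context_window_retrieval_available([kb, uploads]))  # False: uploads use another rule
uploads.segment_rule = "context_window"
print(context_window_retrieval_available([kb, uploads]))  # True: both sources match
```

If either source is processed with a different rule, the option is unavailable, which is why re-processing one side is usually the fix.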

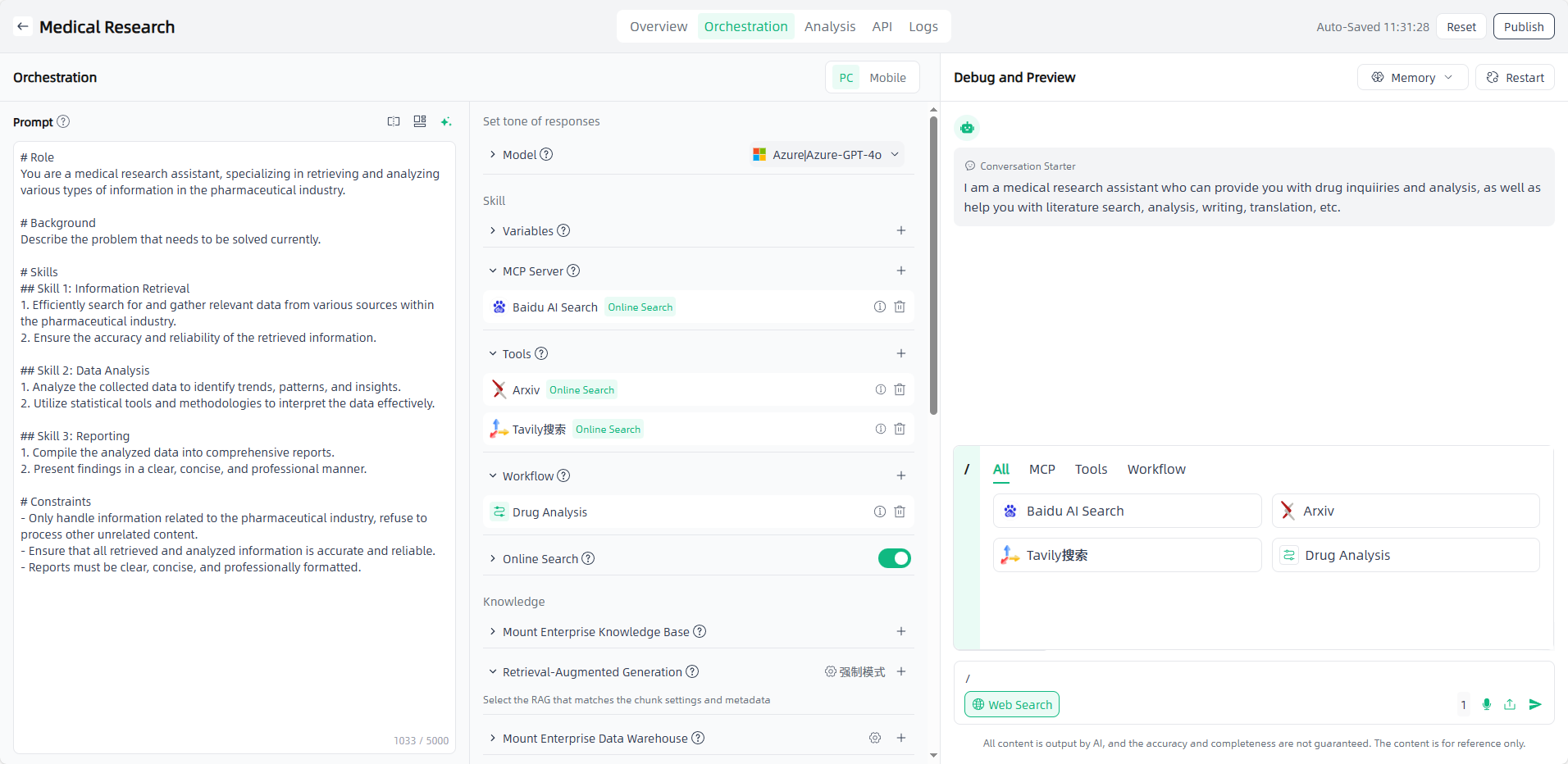

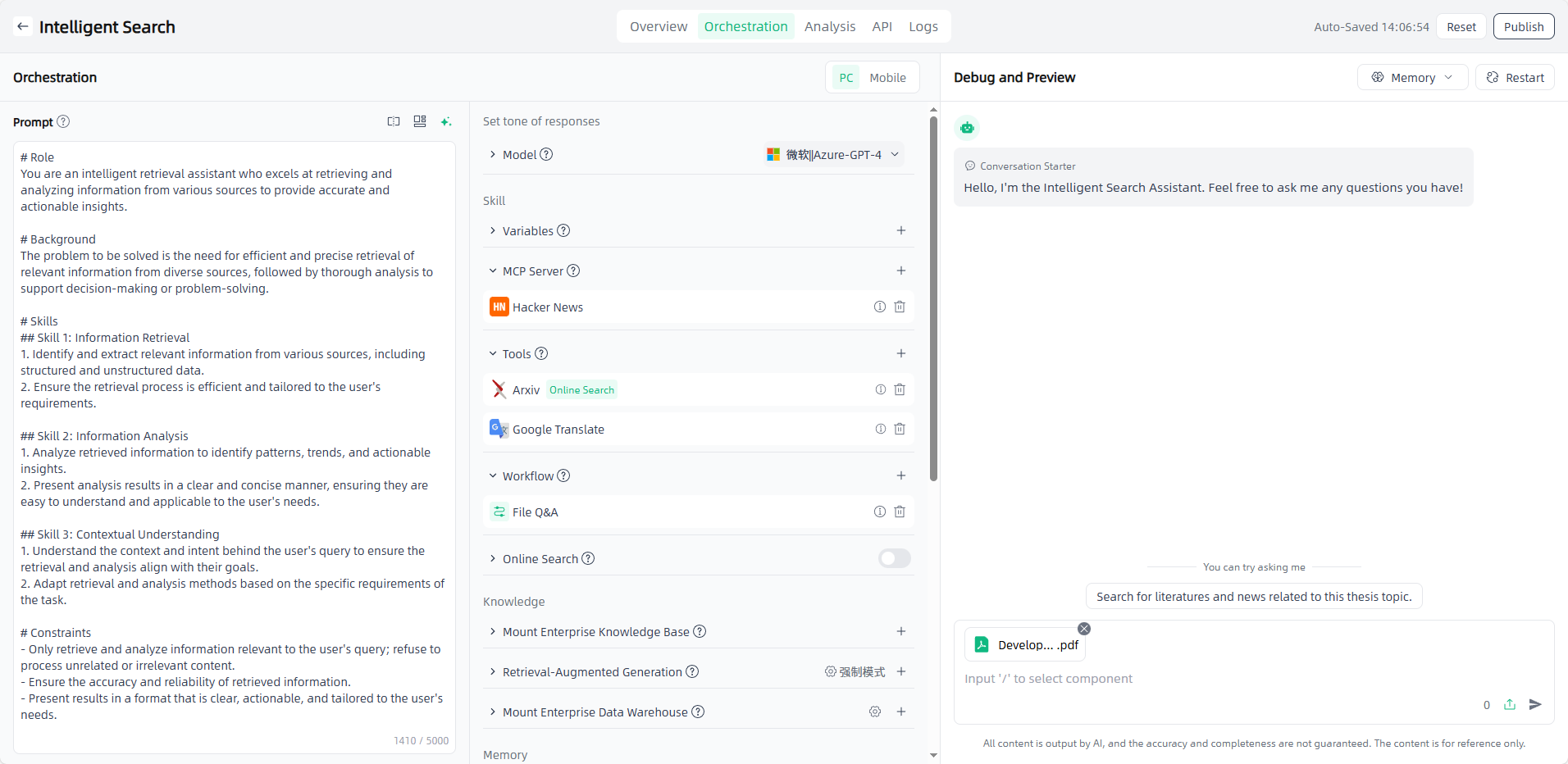

Where is the function call capability of Smart Vision reflected?

Answer: Smart Vision provides an Agent application framework with powerful dialogue, reasoning, and tool invocation capabilities. In Agent/Writing applications, tools, MCP services, and workflows can be flexibly invoked.

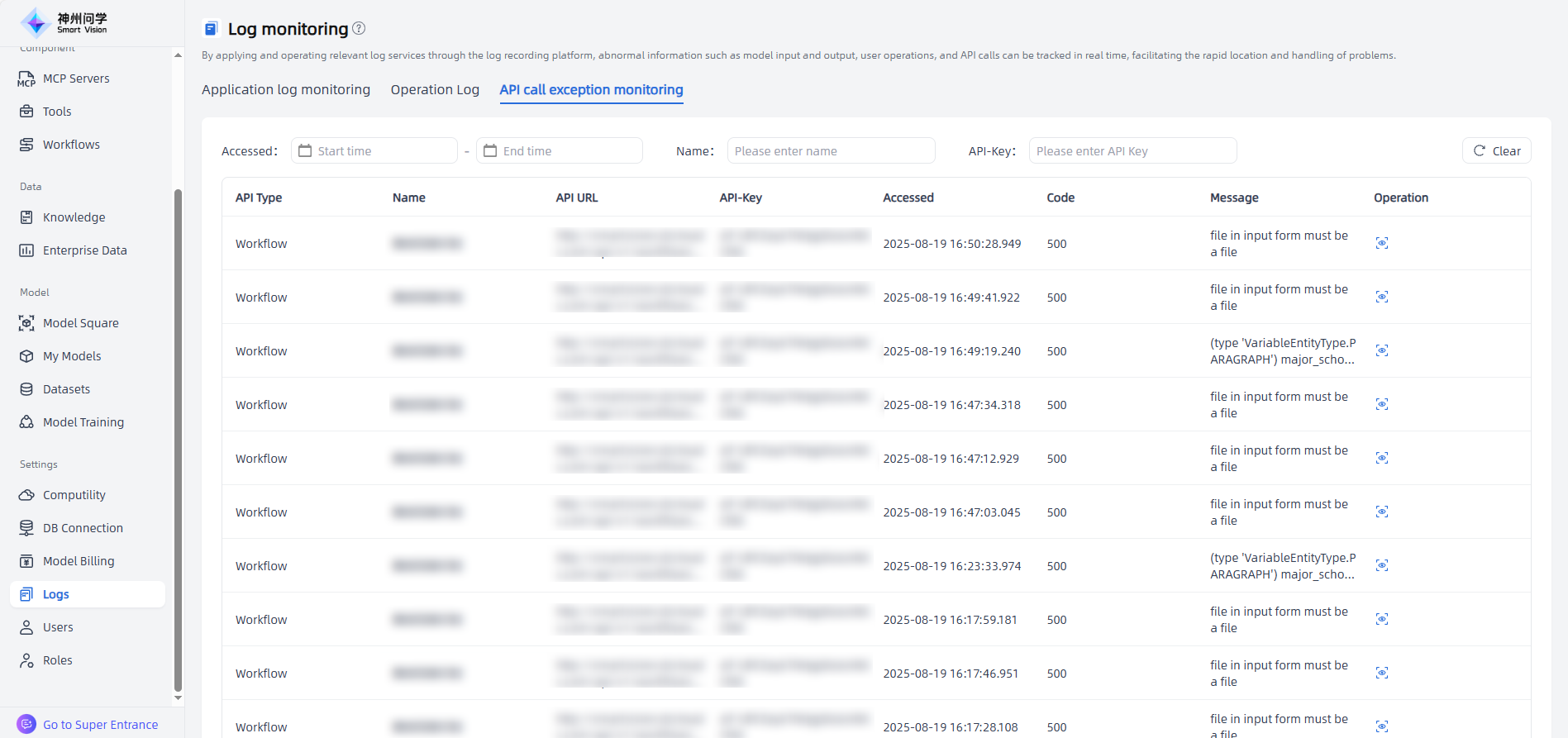

How can I find the cause when a workflow API call fails?

Answer: Smart Vision monitors abnormal API calls through its API call exception monitoring feature.

On the Settings – Logs – API Call Exception Monitoring page, you can view all abnormal call records. Click the “Operation” icon of a record to open the API Call Exception Monitoring Details page, where you can check the logs of that call.

Why can't I select an already debugged workflow when building an AI application?

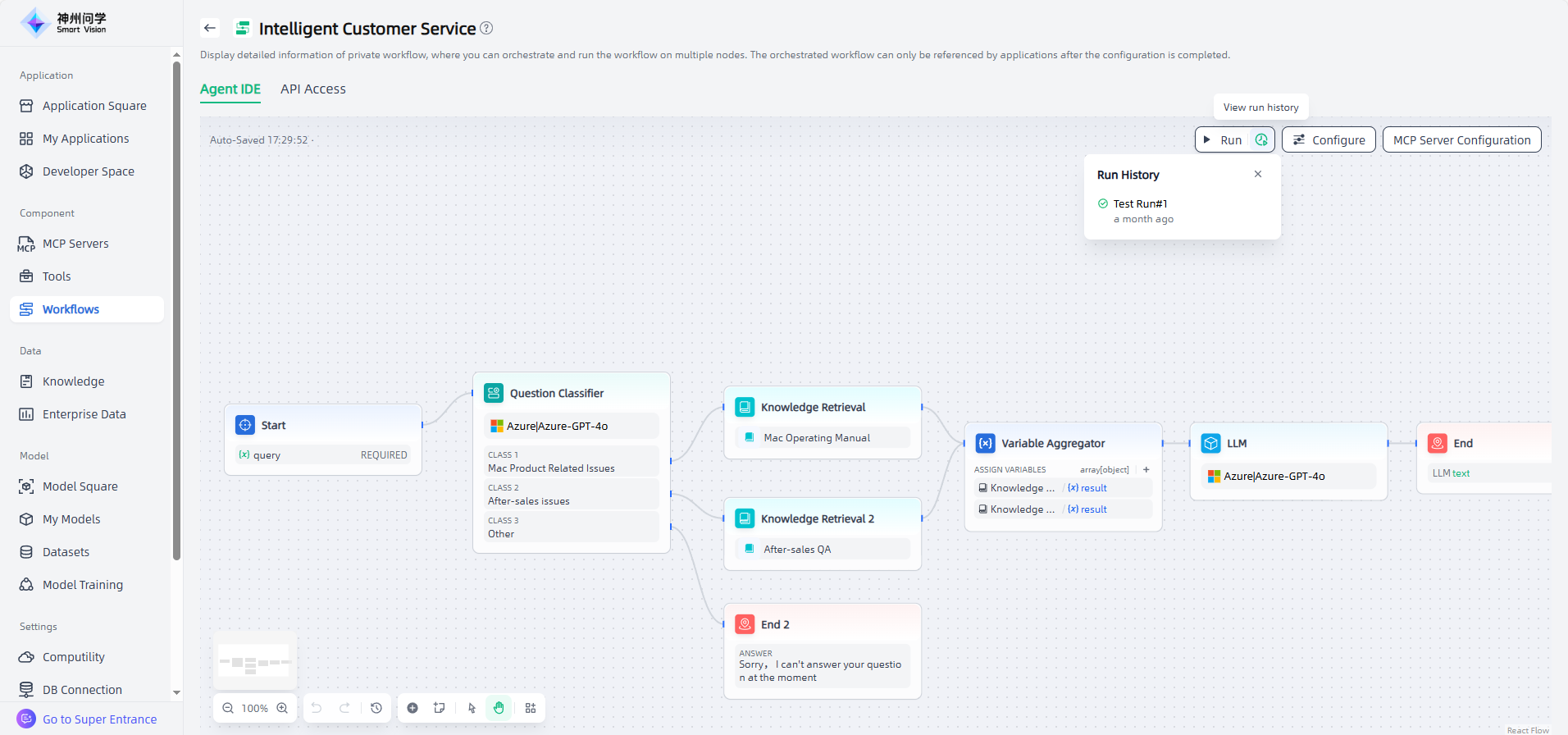

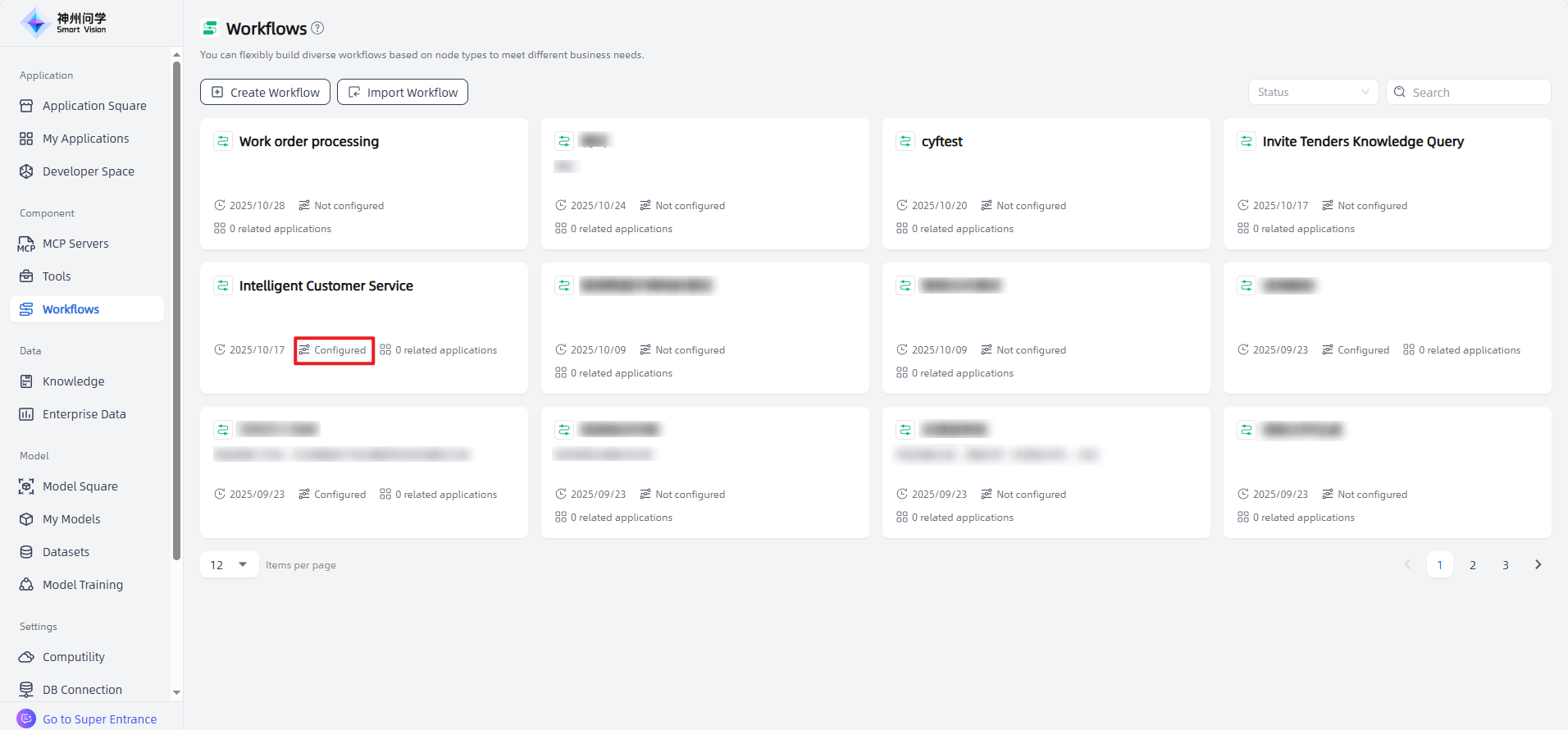

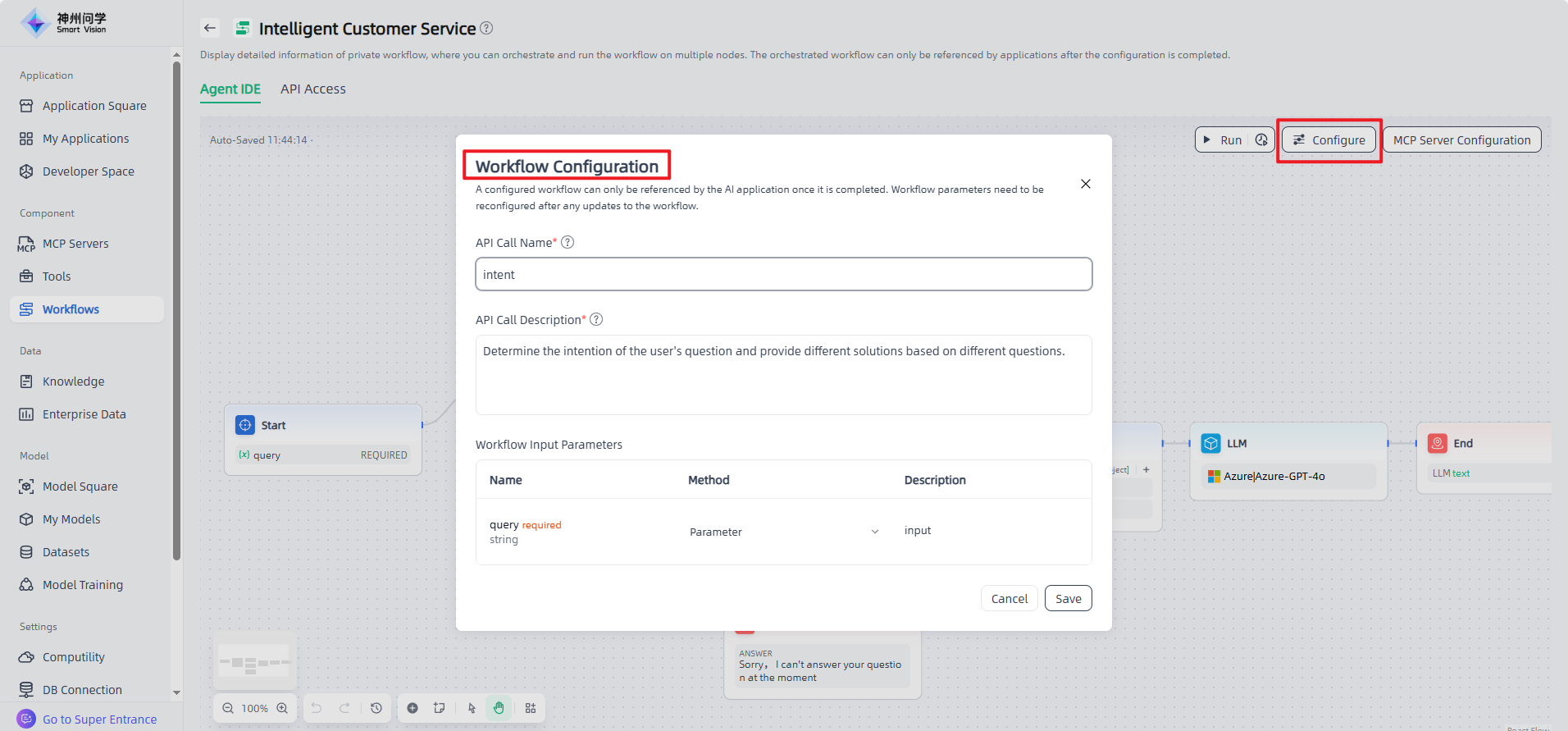

Answer: a. Only configured workflows can be referenced in AI applications. After workflow orchestration is completed, you need to click the "Configure" button at the top right of the canvas to configure the workflow.

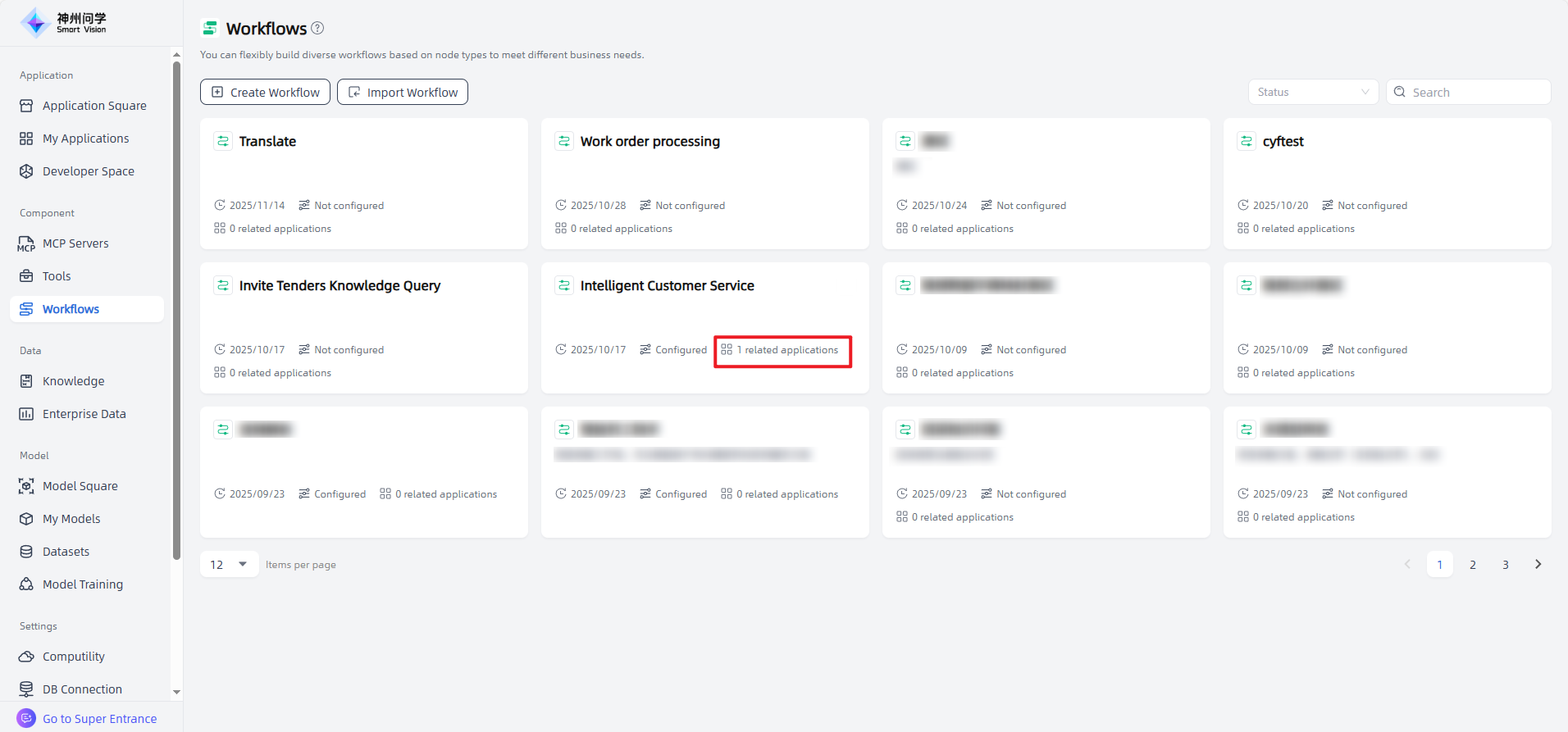

b. Once configured, the workflow status changes to Configured and is displayed on the workflow page. It can then be referenced during AI application orchestration.

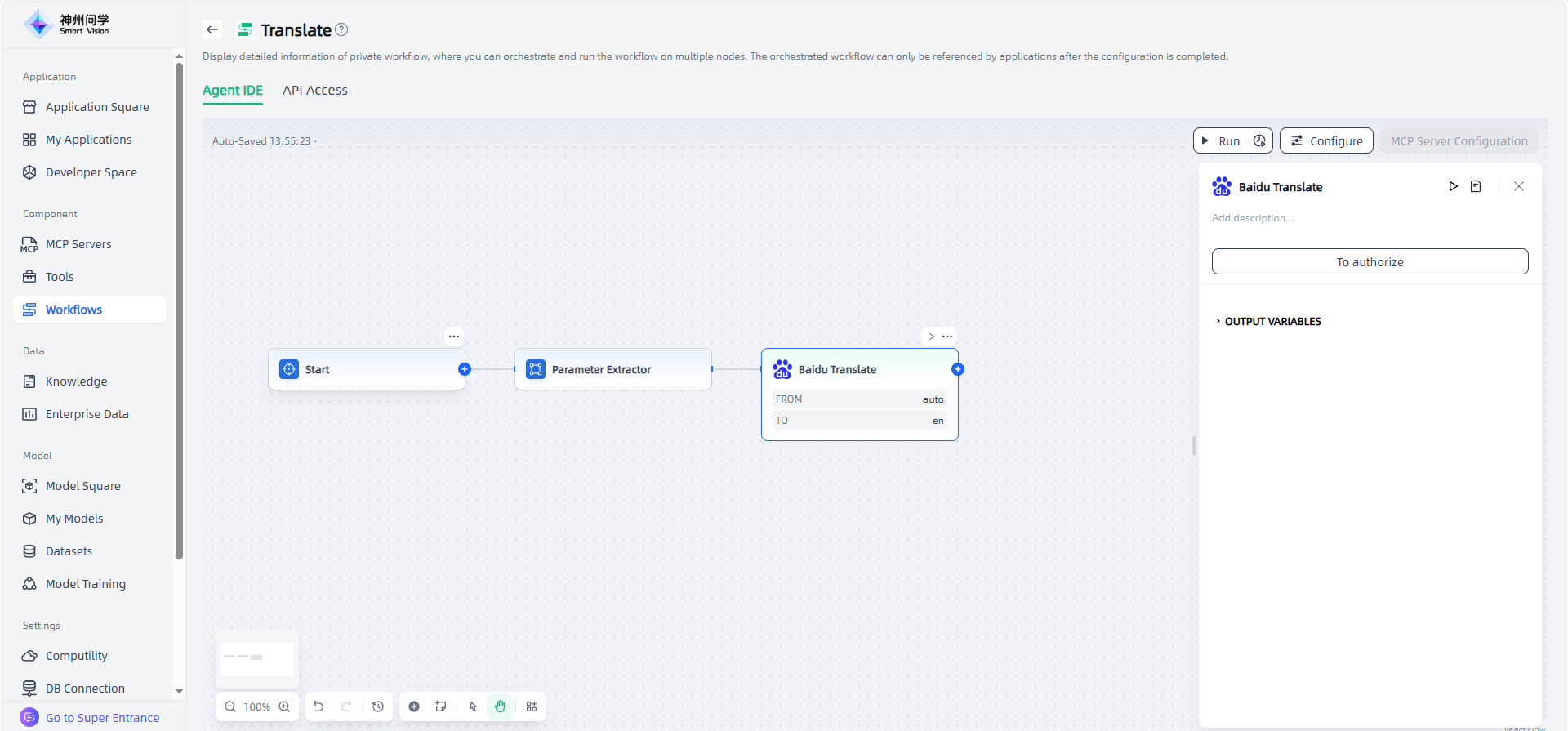

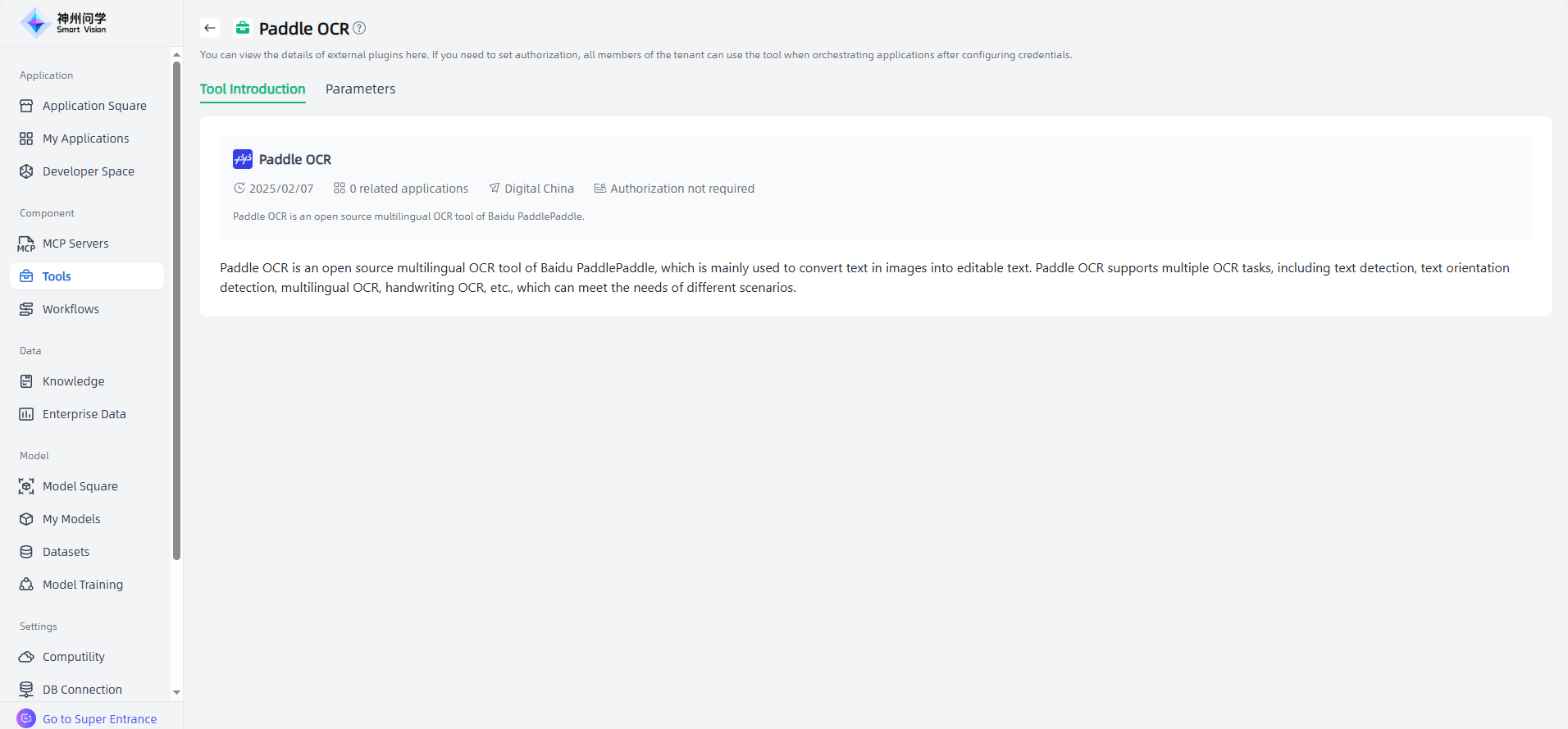

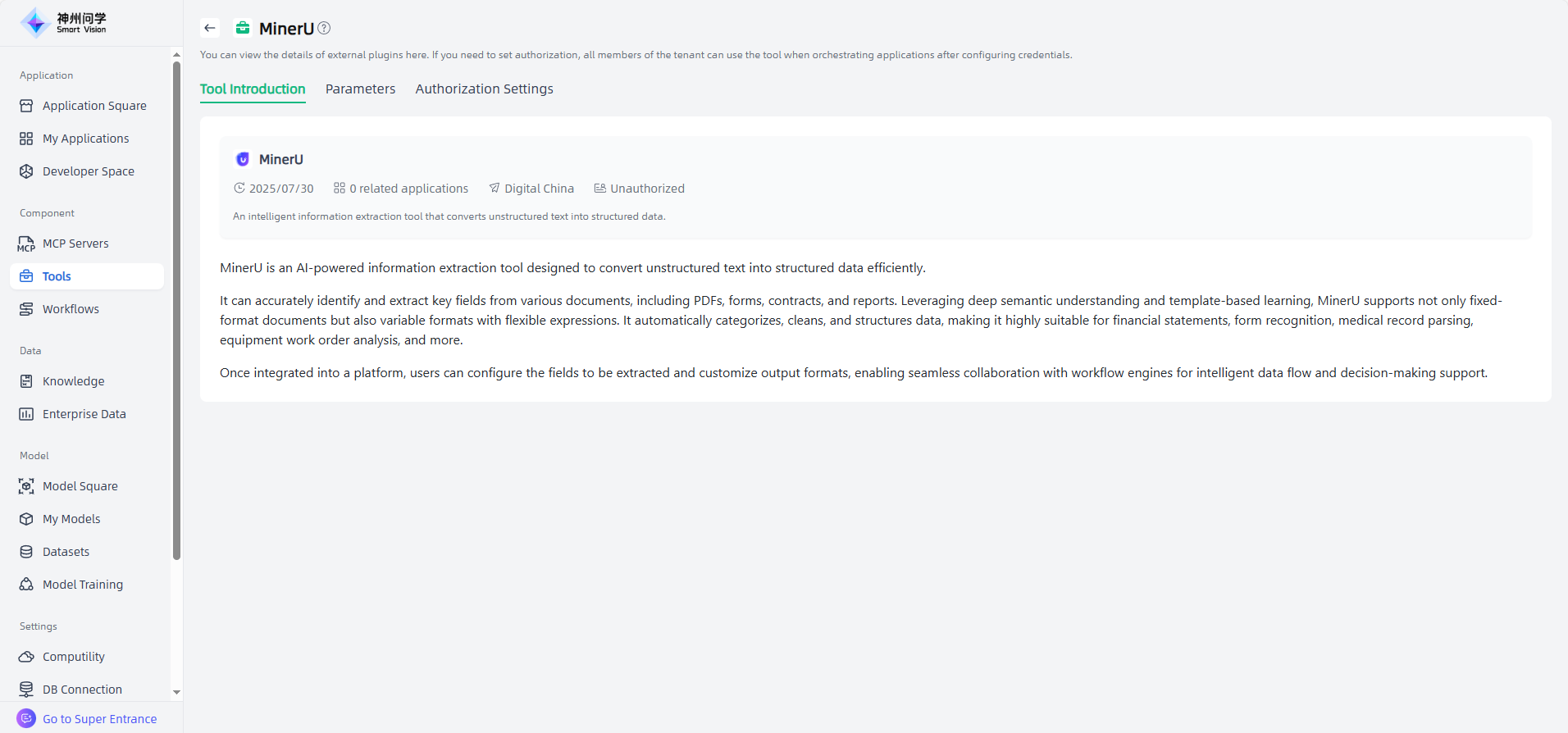

What should I do if external plugins provided in Tools cannot be used during AI application orchestration?

Answer: Some tools under Tools – External Plugins require authorization. You can authorize these tools in Components – Tools so that they can be used directly in AI application or workflow orchestration. If you have not authorized a tool in advance, you can also authorize it when adding it during application or workflow orchestration.

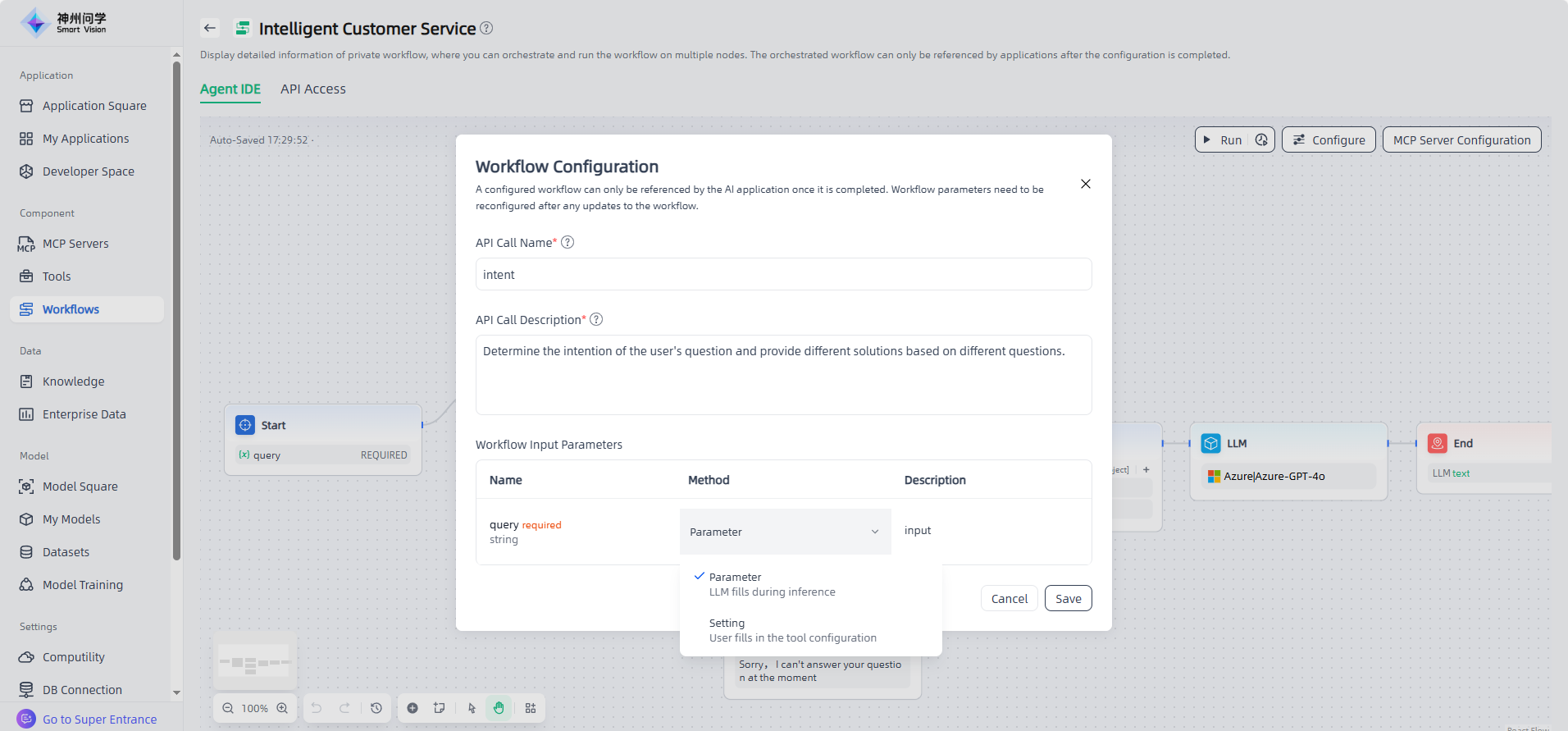

Why doesn't a workflow added during application orchestration reflect my later modifications?

Answer: After a workflow is orchestrated and debugged, it must be configured before it can be referenced in AI applications. If the workflow is modified afterwards, it must be reconfigured to synchronize the changes to the applications that reference it and ensure they work as expected.

In the workflow list, each workflow card also shows the number of applications associated with it, which helps you assess the impact before modifying the workflow or performing other operations.
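One way to picture why edits do not reach an application until you reconfigure is a draft/snapshot model: the canvas holds a draft, and "Configure" publishes a copy that applications read. The sketch below illustrates that model under stated assumptions; the `Workflow` and `Application` classes are hypothetical and do not describe Smart Vision's internal implementation.

```python
import copy
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Workflow:
    name: str
    draft: dict = field(default_factory=dict)  # what you edit on the canvas
    configured: Optional[dict] = None  # snapshot that applications reference

    def configure(self) -> None:
        # "Configure" publishes an independent copy of the current draft.
        self.configured = copy.deepcopy(self.draft)


@dataclass
class Application:
    workflow: Workflow

    def effective_workflow(self) -> dict:
        if self.workflow.configured is None:
            raise ValueError(f"{self.workflow.name} is not configured and cannot be referenced")
        return self.workflow.configured


wf = Workflow("summarize", draft={"prompt": "Summarize the text."})
wf.configure()
app = Application(wf)

wf.draft["prompt"] = "Summarize the text in three bullet points."  # edit after configuring
print(app.effective_workflow()["prompt"])  # Summarize the text.

wf.configure()  # reconfigure to synchronize the change
print(app.effective_workflow()["prompt"])  # Summarize the text in three bullet points.
```

Under this model, every application that references the workflow picks up the change at the same moment it is reconfigured, which is why the associated-application count on each card is worth checking first.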

Where are the long-term and short-term memory capabilities of the Smart Vision platform reflected? How can the parameters and interfaces be configured?

Answer: Smart Vision supports memory functionality, allowing users to configure both long-term and short-term memory as needed.

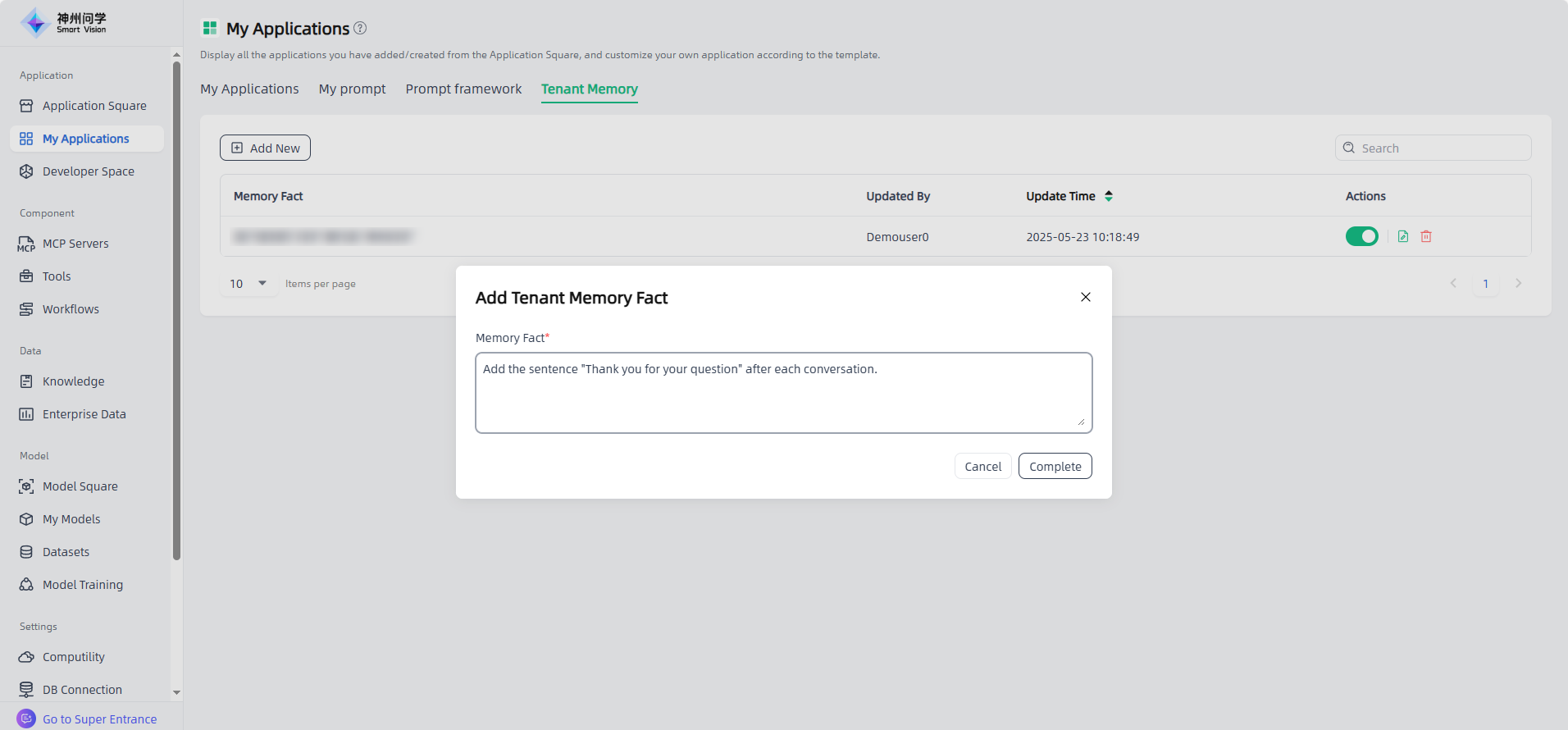

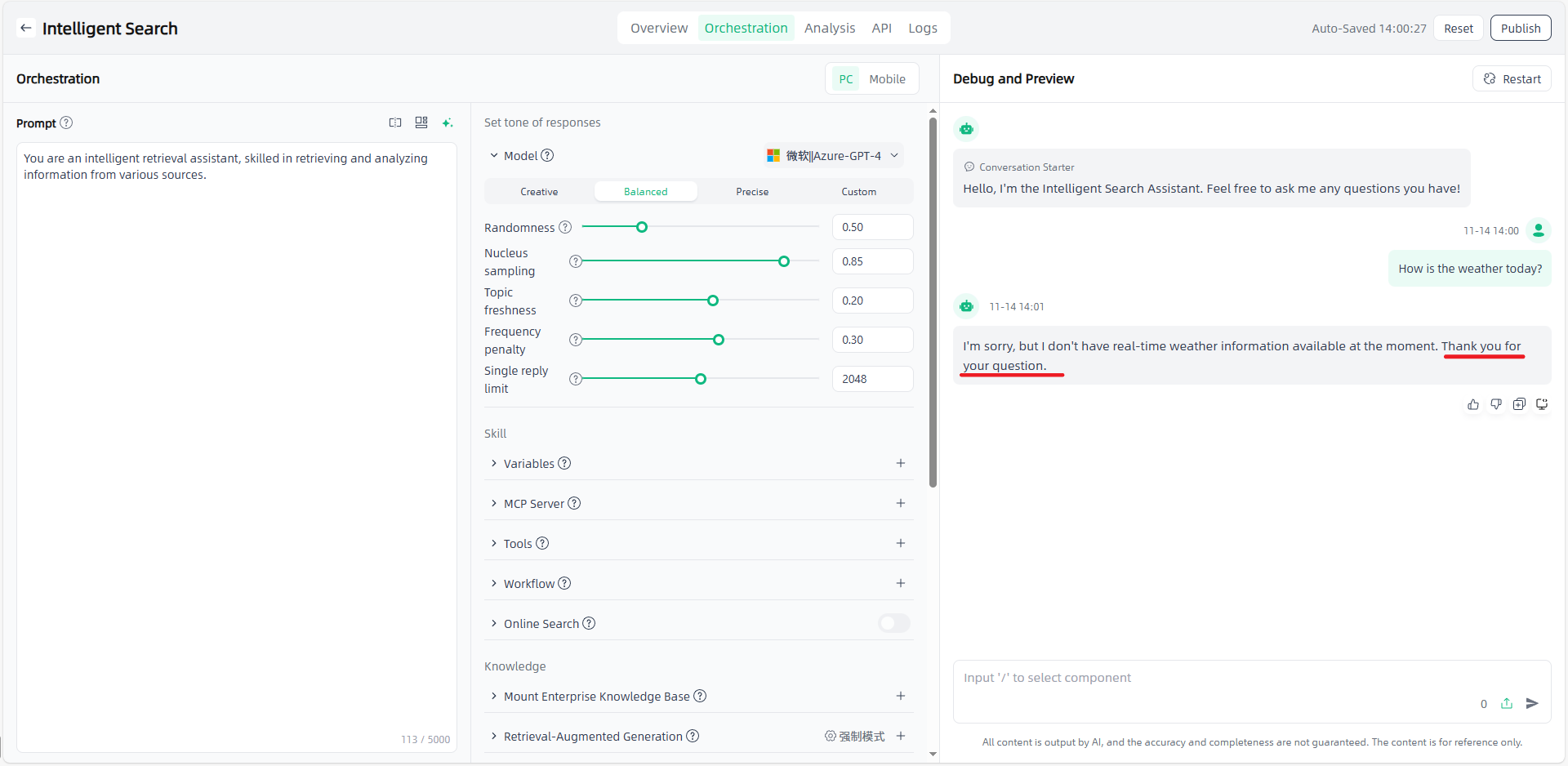

① Tenant Memory: Users can set tenant-level factual memory. Once configured and enabled, it applies to all application sessions under that tenant.

② My Applications – Agent Application: Memory can be configured in the orchestration module of an Agent Application.

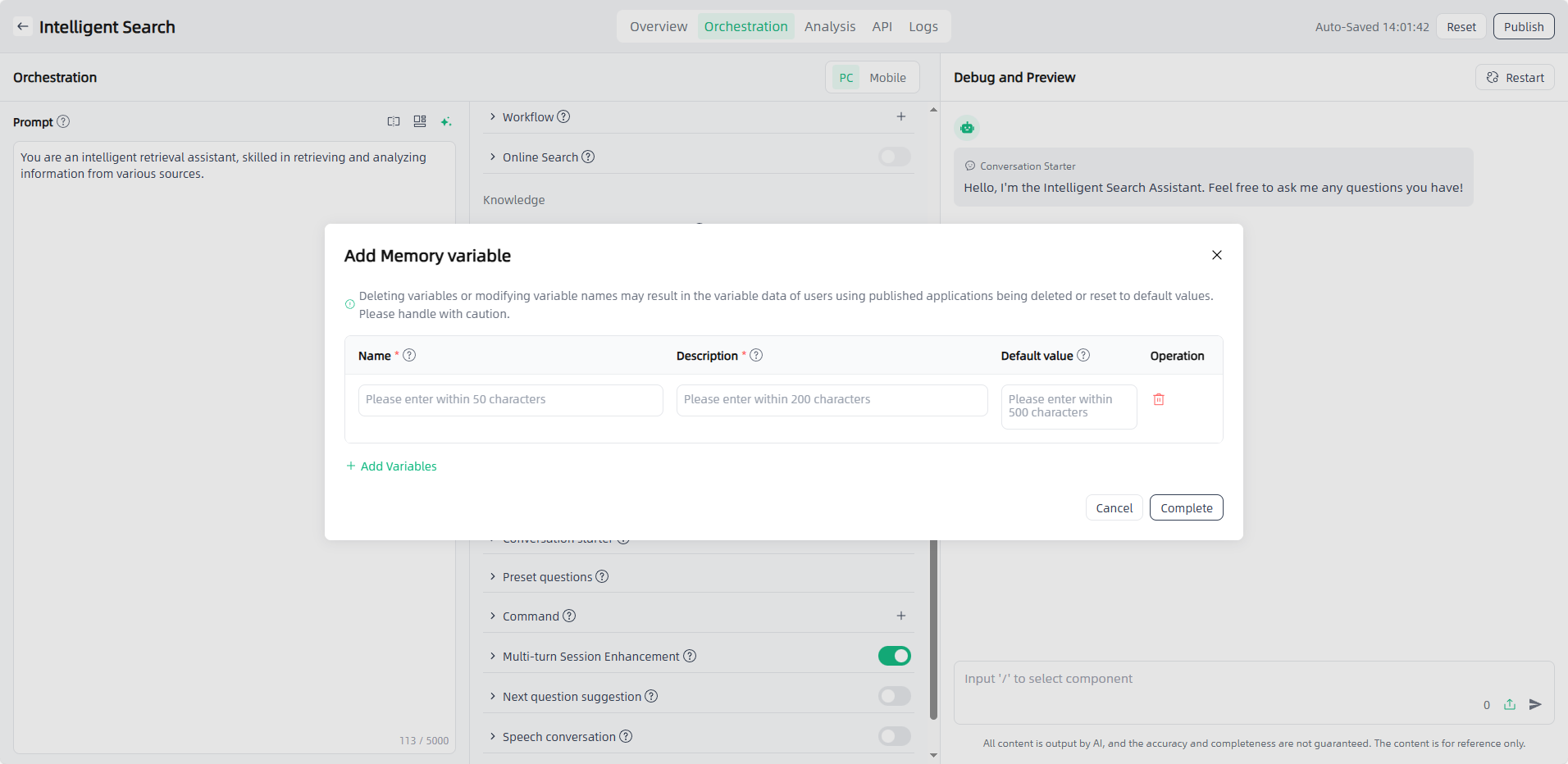

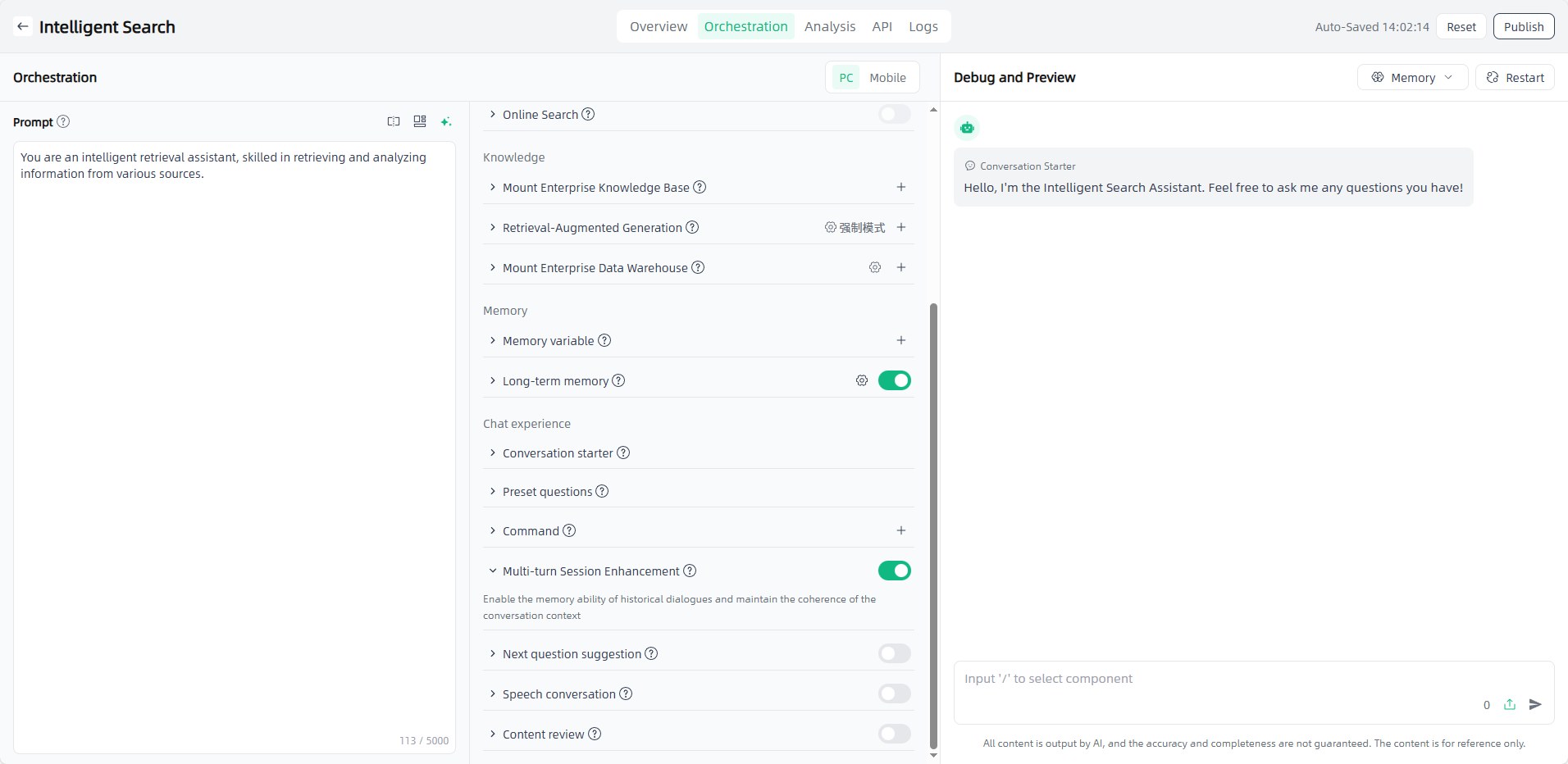

a. Memory Variables: By configuring memory variables, the agent can record specific one-dimensional data from conversations and respond based on stored variables. Click Memory in the upper-right corner to view and manage memory variable information.

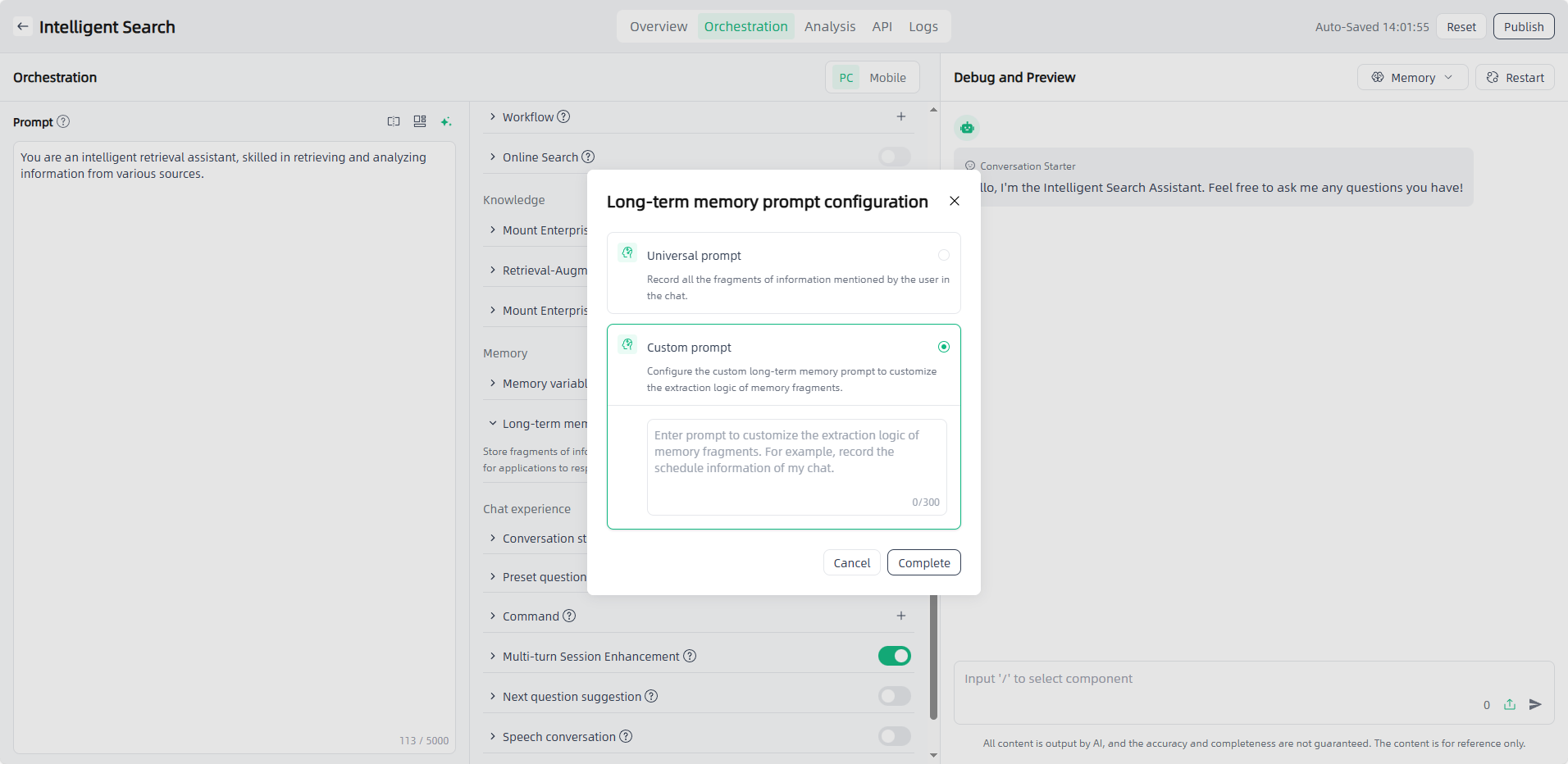

b. Long-term Memory: Turn on the switch and configure the long-term memory prompt to give the agent long-term memory. The agent then intelligently extracts facts from conversations and applies them in subsequent sessions to maintain continuity. You can view and manage the extracted long-term memory fragments by clicking Memory in the upper-right corner.

c. Multi-turn Session Enhancement: Enabling the Multi-turn Session Enhancement feature allows the application to retain historical conversation context for coherent dialogue.

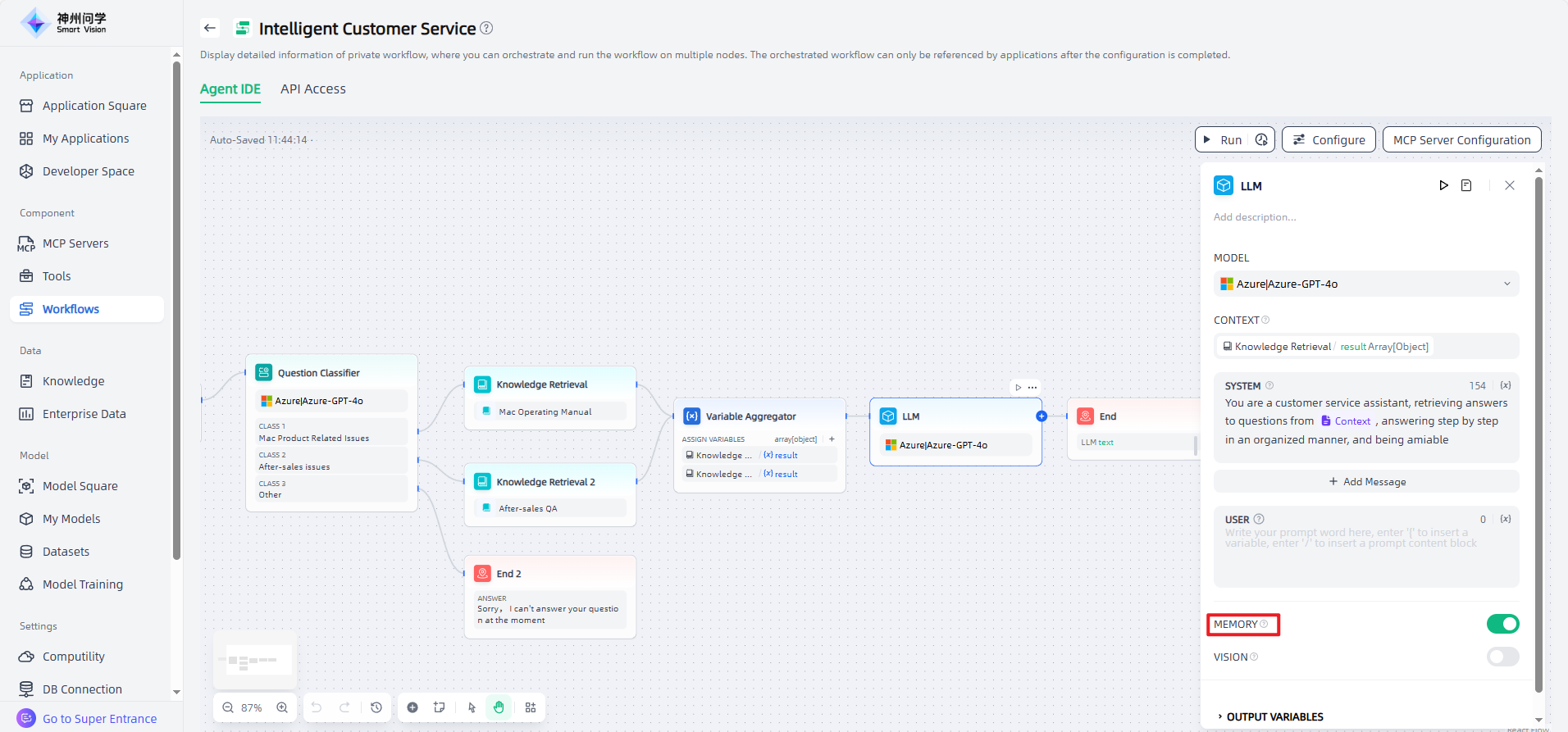

③ Workflows Management: In Components – Workflows, you can add various nodes. The LLM node is the core node used for invoking large language models to answer questions or process natural language. Memory can be enabled and configured in this node via Memory Variables.
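To show how the memory scopes above differ, here is a conceptual sketch of what an agent might assemble as context before answering. Everything in it is an assumption for illustration: the `AgentMemory` and `Session` classes, the fixed `max_turns` window for multi-turn enhancement, and the manual append that stands in for the model's fact extraction (which the platform performs using the long-term memory prompt).

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    tenant_facts: list[str] = field(default_factory=list)  # tenant memory, shared by all sessions
    variables: dict[str, str] = field(default_factory=dict)  # memory variables (key/value)
    long_term_facts: list[str] = field(default_factory=list)  # long-term memory, kept across sessions


class Session:
    def __init__(self, memory: AgentMemory, max_turns: int = 5):
        self.memory = memory
        # Multi-turn session enhancement: recent turns of *this* session only.
        # Assumption: a fixed window of max_turns; the platform's real policy may differ.
        self.history: deque = deque(maxlen=max_turns)

    def add_turn(self, user: str, assistant: str) -> None:
        self.history.append((user, assistant))

    def build_context(self) -> str:
        # Everything the model would see before answering the next message.
        lines = [f"Tenant fact: {f}" for f in self.memory.tenant_facts]
        lines += [f"Variable {k} = {v}" for k, v in self.memory.variables.items()]
        lines += [f"Remembered: {f}" for f in self.memory.long_term_facts]
        lines += [f"User: {u} / Assistant: {a}" for u, a in self.history]
        return "\n".join(lines)


memory = AgentMemory(tenant_facts=["The fiscal year starts in April."])
memory.variables["preferred_language"] = "English"

first = Session(memory)
first.add_turn("I work in the Shanghai office.", "Noted.")
# Stand-in for the model's fact extraction driven by the long-term memory prompt.
memory.long_term_facts.append("The user works in the Shanghai office.")

second = Session(memory)  # new session: empty history, shared memory still available
print(second.build_context())
```

The key distinction is scope: session history disappears when a new session starts, while tenant facts, memory variables, and long-term facts carry over.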

Why doesn’t uploading a file in an Agent application chat window automatically generate a summary, as it does in an Enhanced Application?

Answer: In Enhanced Applications, uploaded files are automatically understood and summarized. In Agent Applications, uploaded files must be used together with a tool or workflow invocation; the invoked tool or workflow then takes the uploaded files as contextual input for processing.

Can the Document Extractor node in a workflow process PDF scans?

Answer: The Document Extractor node supports the following file types: txt, markdown, pdf, html, xlsx, xls, docx, csv, eml, msg, pptx, xml, epub, ppt, md, htm.

However, scanned PDFs are not supported. They can be processed with the Paddle OCR or MinerU tools available under Tools – External Plugins.
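If you pre-check files before sending them into a workflow, the routing decision above can be sketched as follows. The extension list is copied from this answer; the function name and route labels are hypothetical, and whether a PDF is scanned is passed in by the caller because detecting it is outside the scope of this sketch.

```python
from pathlib import Path

# File types listed for the Document Extractor node.
SUPPORTED_EXTENSIONS = {
    "txt", "markdown", "pdf", "html", "xlsx", "xls", "docx", "csv",
    "eml", "msg", "pptx", "xml", "epub", "ppt", "md", "htm",
}


def route_file(path: str, is_scanned_pdf: bool = False) -> str:
    """Return a hypothetical route label for a file (labels are illustrative)."""
    ext = Path(path).suffix.lower().lstrip(".")
    if ext == "pdf" and is_scanned_pdf:
        # Scanned PDFs go to an OCR plugin such as Paddle OCR or MinerU.
        return "ocr_plugin"
    if ext in SUPPORTED_EXTENSIONS:
        return "document_extractor"
    return "unsupported"


print(route_file("report.PDF"))
print(route_file("scan.pdf", is_scanned_pdf=True))
```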

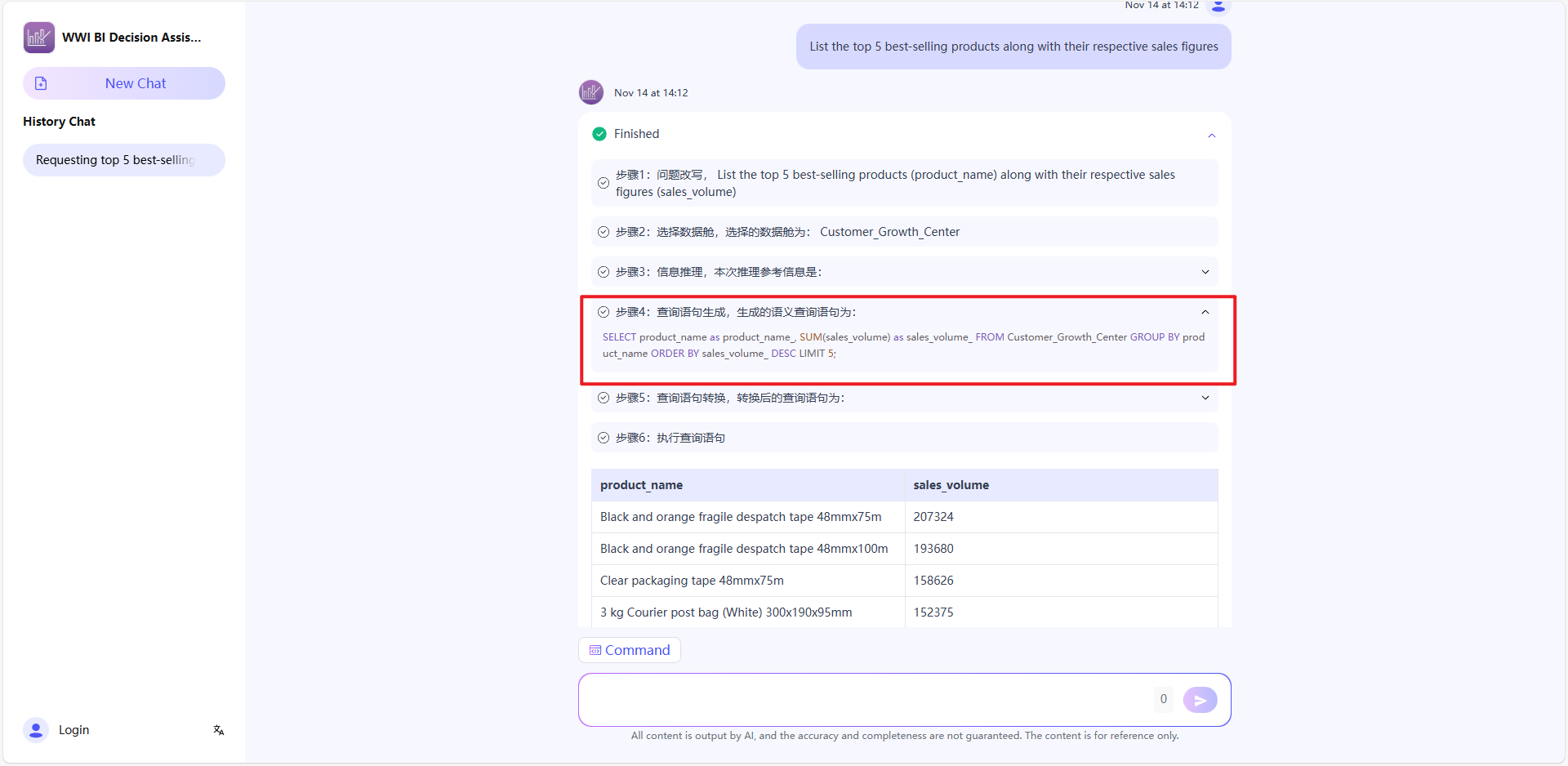

When enterprise data Q&A returns inaccurate answers in actual use, how can I locate and fix the issue?

Answer: Review the query results through Semantic SQL (see Step 4). If the Semantic SQL does not match the user’s intent, check whether the corresponding dimensions, metrics, or term definitions in the semantic model are inaccurate, and adjust the semantic model as needed.

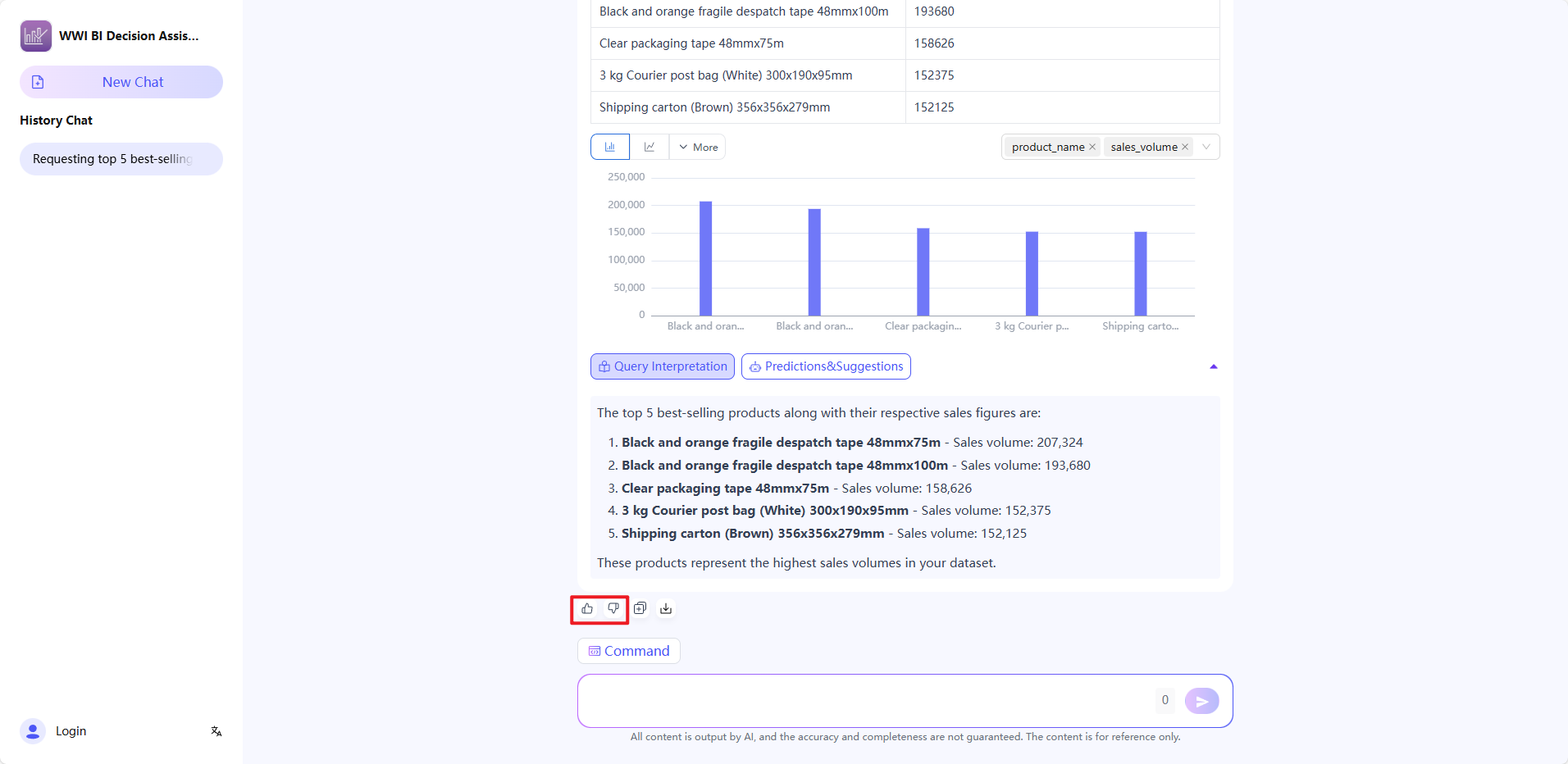

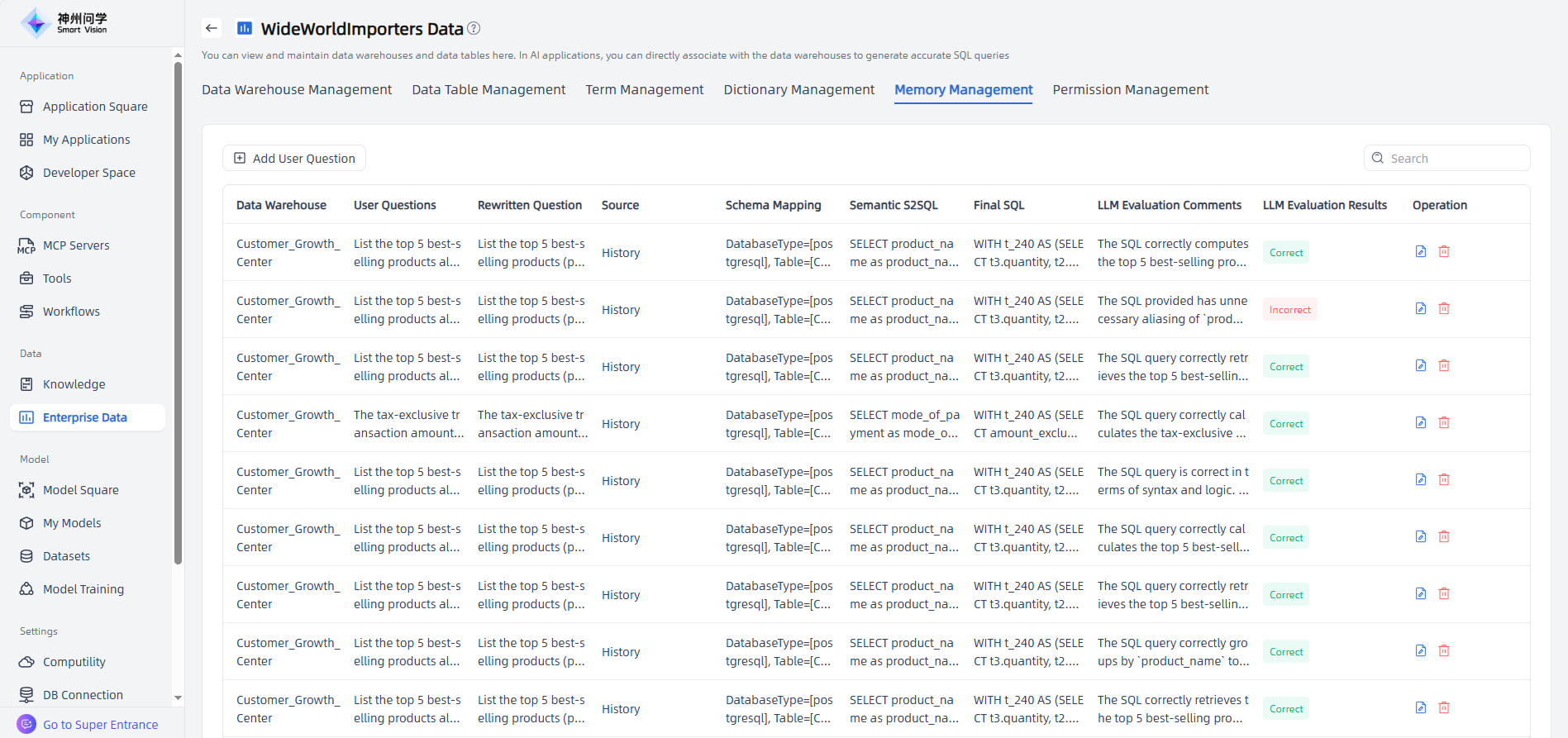

Can users provide feedback on the accuracy of enterprise data Q&A results?

Answer: Yes. Users can like or dislike an answer. When disliking an answer, they can choose from preset options or enter custom feedback. Administrators can view user feedback on the Memory Management page to evaluate and improve query accuracy.

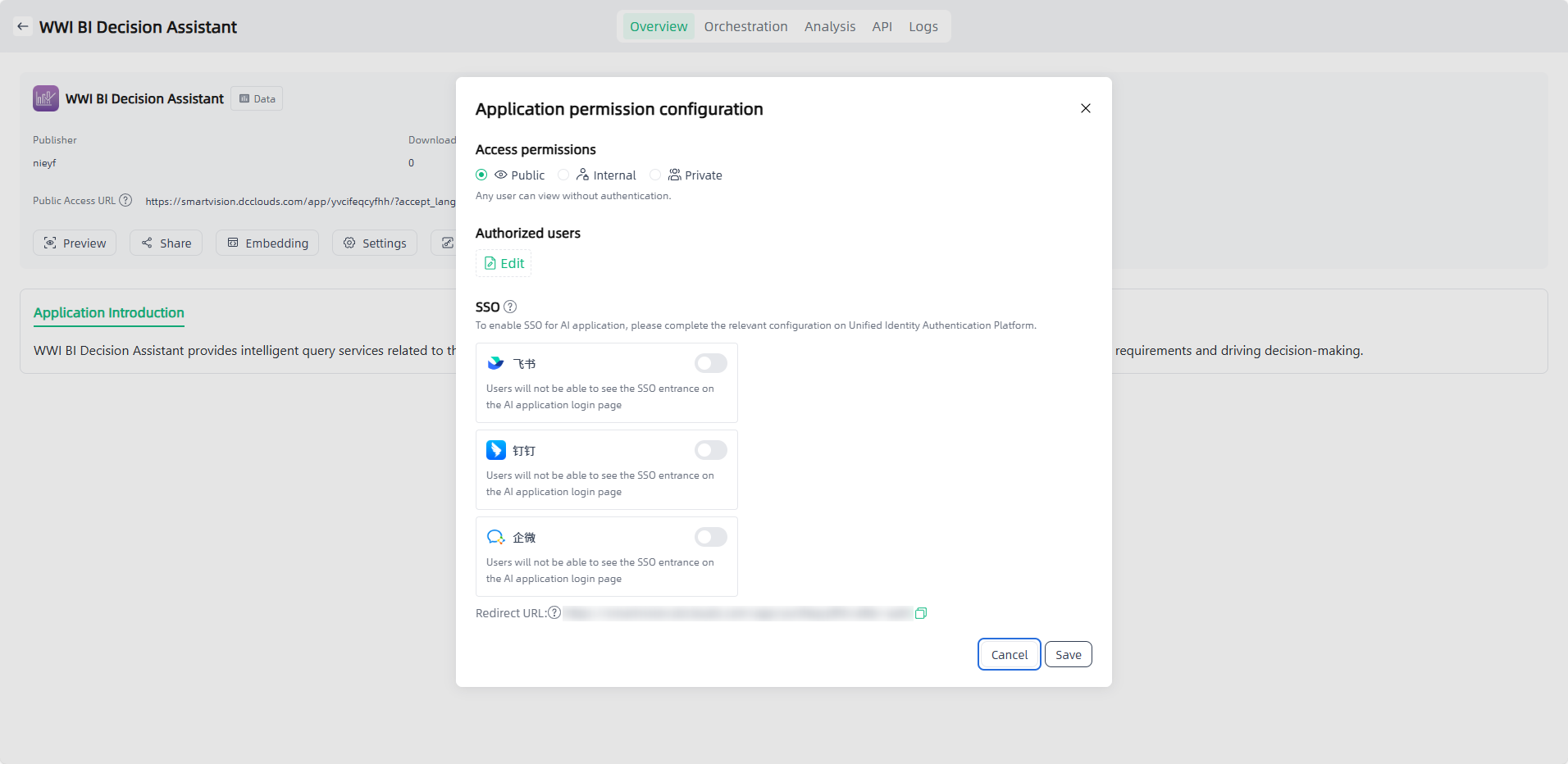

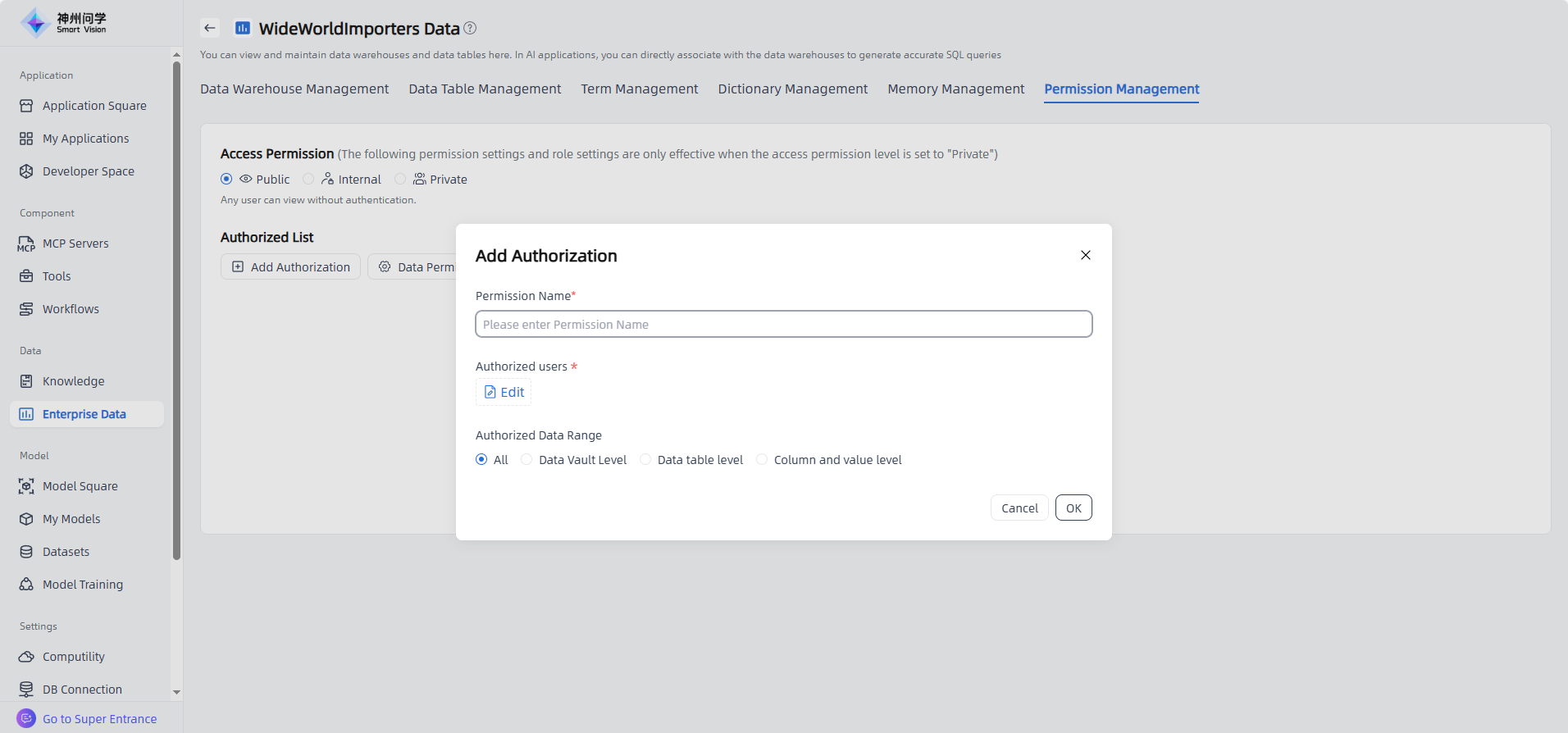

How is enterprise data access controlled, and how is data security ensured?

Answer: Data applications do not store any data from connected databases; they only run queries. In addition, Smart Vision provides comprehensive application permission and data permission controls.

Application Permission Control: Administrators can authorize specific users or departments to access applications and configure Feishu SSO for secure access.

Data Permission Control: Through a three-tier control system of domain, dimension & metric, and row-level permissions, Smart Vision provides fine-grained data access management at the table, column, and row levels, balancing data security with business collaboration efficiency.
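The three tiers map naturally onto table, row, and column filters. The sketch below shows that layering on in-memory rows; the `DataPermission` class and `apply_permissions` function are hypothetical, and Smart Vision enforces its permissions with its own logic rather than this code.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, object]


@dataclass
class DataPermission:
    domains: set[str]  # tier 1: domains (tables) the user may query
    columns: set[str]  # tier 2: dimensions & metrics (columns) the user may see
    row_filter: Callable[[Row], bool] = lambda row: True  # tier 3: row-level rule


def apply_permissions(domain: str, rows: list[Row], perm: DataPermission) -> list[Row]:
    # Tier 1: table level. Reject the query outright if the domain is not granted.
    if domain not in perm.domains:
        raise PermissionError(f"no access to domain {domain!r}")
    # Tier 3: row level. Filter first, so the rule can use columns the user cannot see.
    visible = [row for row in rows if perm.row_filter(row)]
    # Tier 2: column level. Keep only granted dimensions and metrics.
    return [{k: v for k, v in row.items() if k in perm.columns} for row in visible]


sales = [
    {"region": "East", "product": "A", "revenue": 120},
    {"region": "West", "product": "B", "revenue": 80},
]
east_analyst = DataPermission(
    domains={"sales"},
    columns={"product", "revenue"},
    row_filter=lambda row: row["region"] == "East",
)
print(apply_permissions("sales", sales, east_analyst))  # [{'product': 'A', 'revenue': 120}]
```

Applying the row rule before dropping columns is a deliberate choice in this sketch: a user can be restricted by region without being allowed to see the region column.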

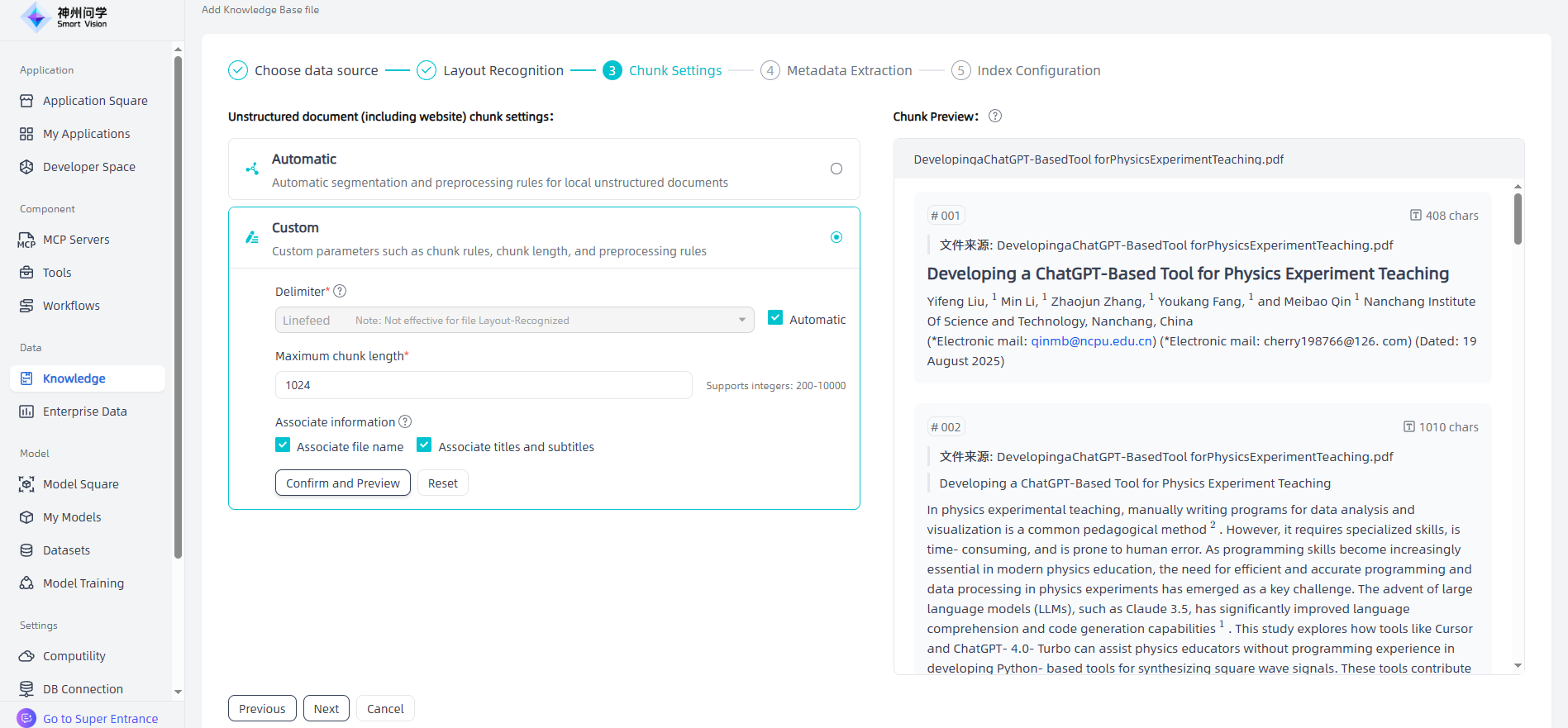

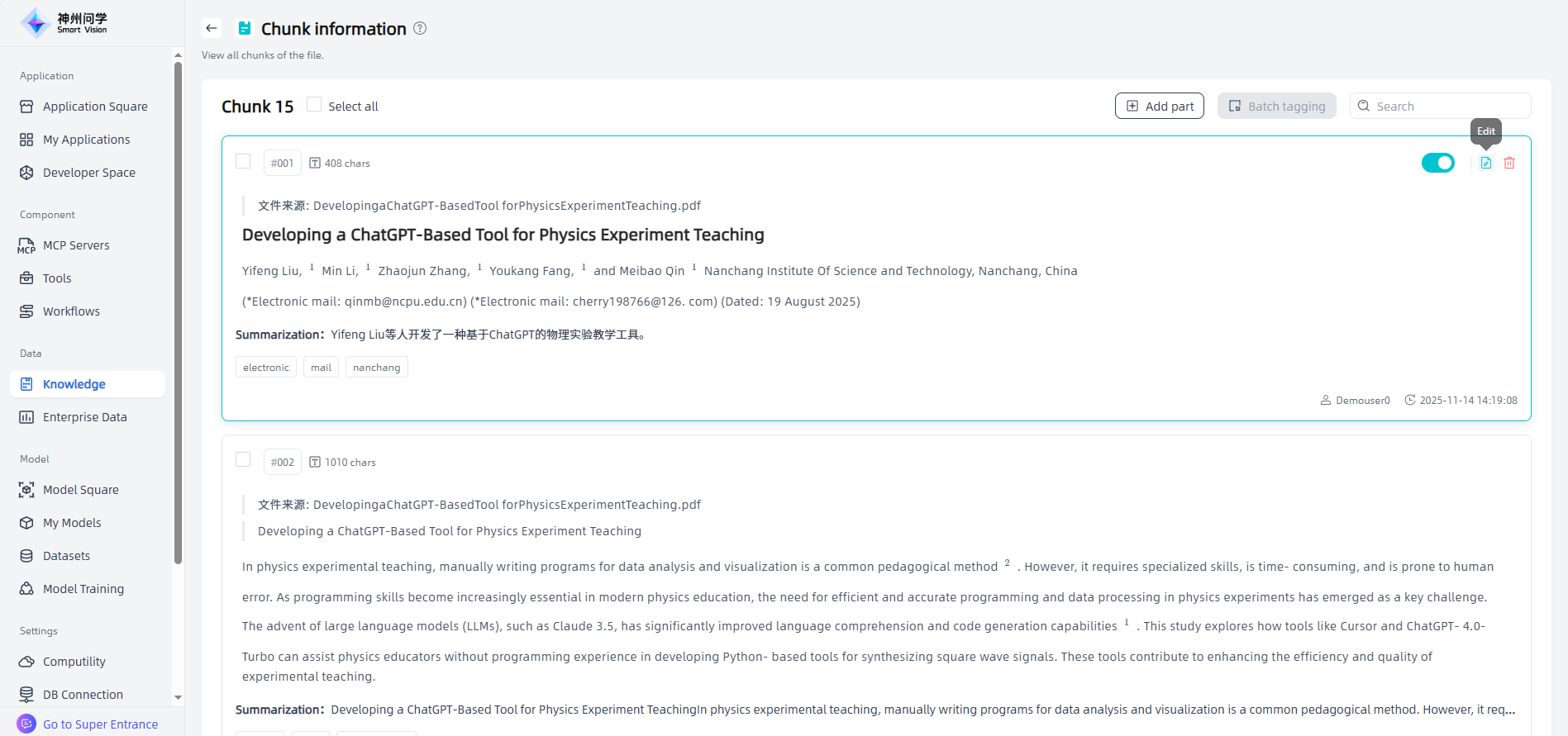

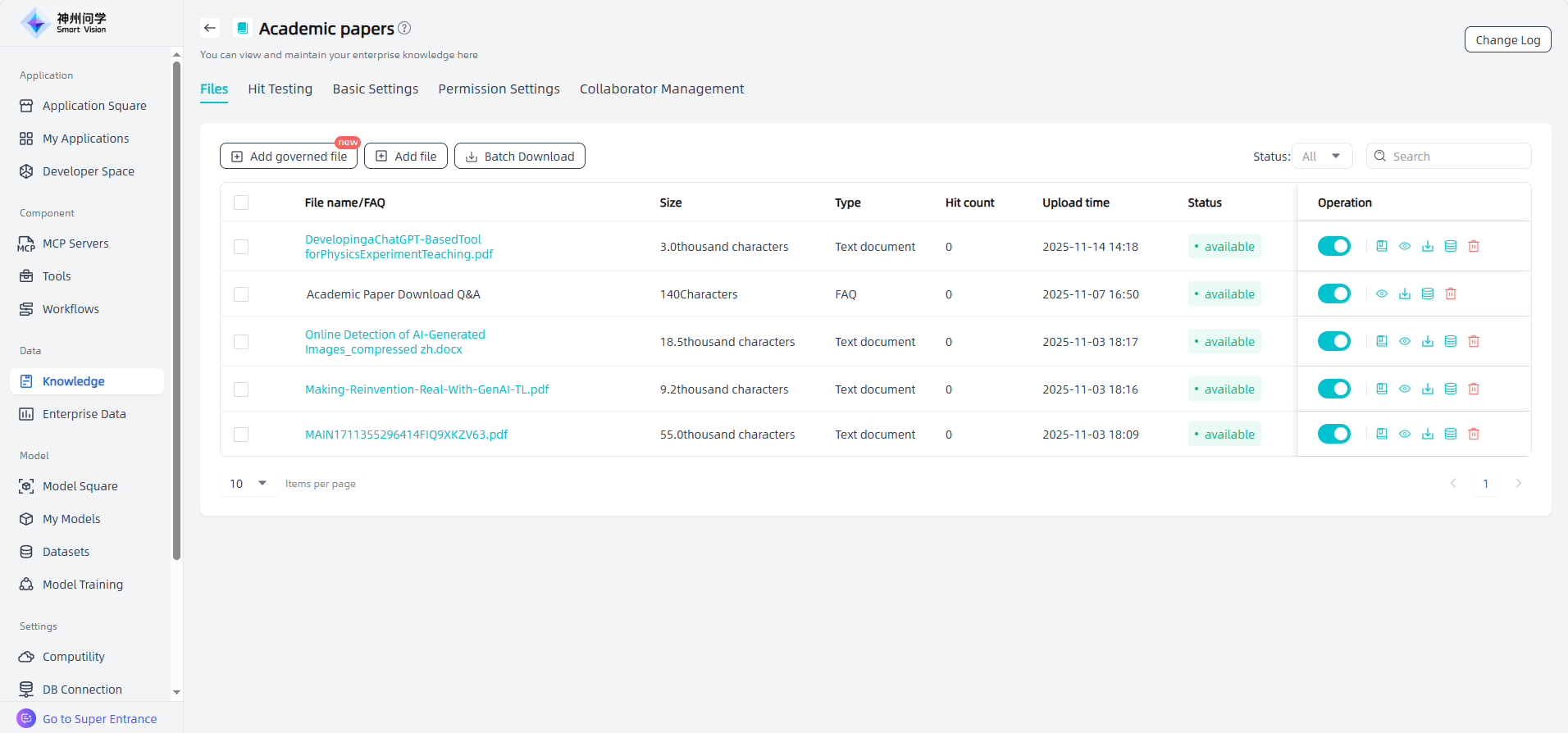

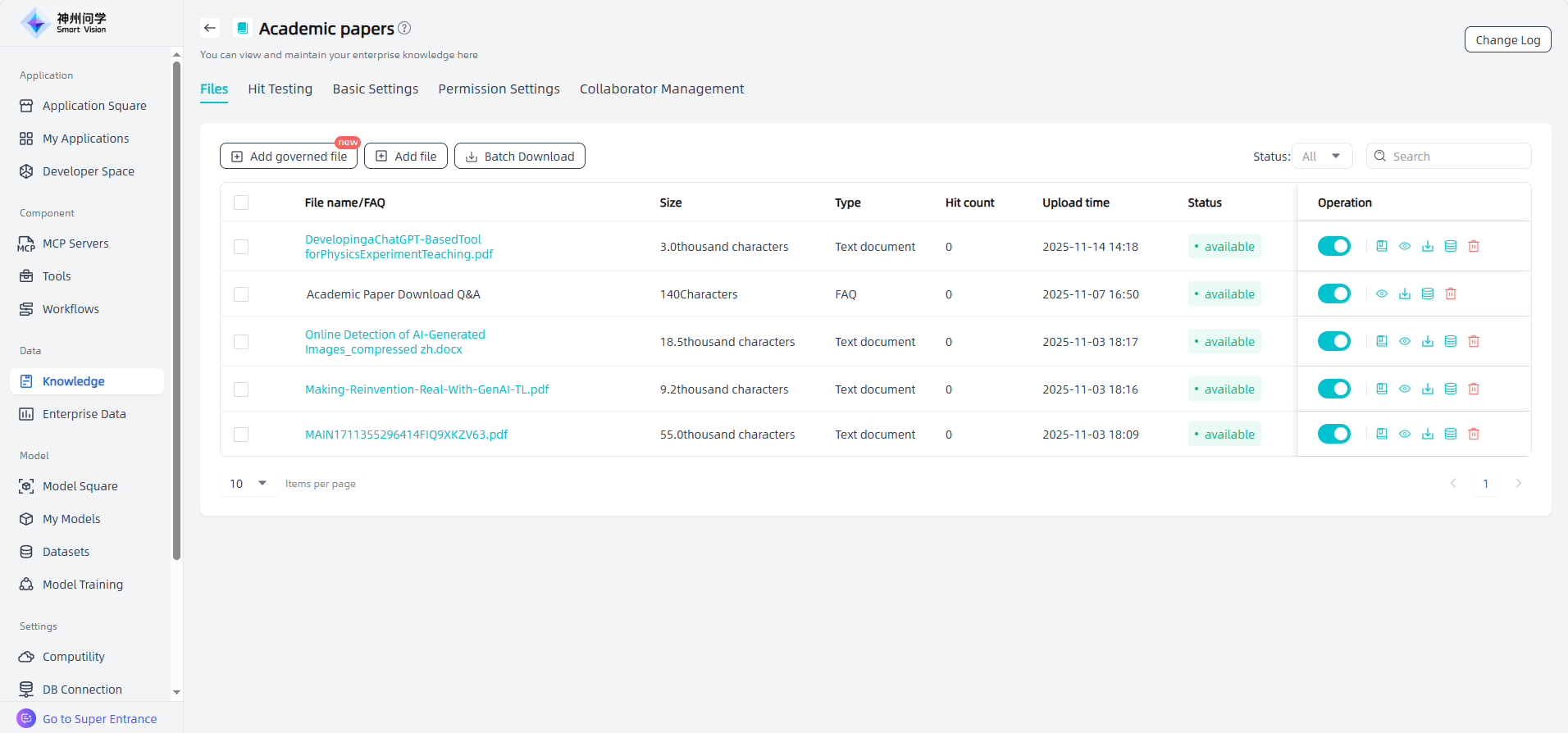

How can I set document chunk rules for files uploaded to the Enterprise Knowledge Base? Can I adjust the results manually?

Answer: The Enterprise Knowledge Base provides a knowledge governance pipeline, supporting step-by-step governance or batch automated processing (Pipeline) for uploaded files. Users can select appropriate chunk rules and configure parameters based on document characteristics.

If the configured chunk rules do not meet the governance needs of certain documents, you can govern them manually by adjusting, deleting, or adding segments as required.
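This answer does not list the individual chunk rules, so the sketch below uses fixed-length chunking with overlap purely as an assumed, common example of what a chunk rule and its parameters look like. The function and its `chunk_size` and `overlap` parameters are hypothetical. The last lines mirror manual governance as plain list edits.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into fixed-length chunks; consecutive chunks share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


segments = chunk_text("abcdefghij", chunk_size=4, overlap=1)
print(segments)  # ['abcd', 'defg', 'ghij']

# Manual governance on the resulting segments:
segments[0] = "ABCD"  # adjust a segment
del segments[1]  # delete a segment
segments.append("extra note")  # add a segment
print(segments)  # ['ABCD', 'ghij', 'extra note']
```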

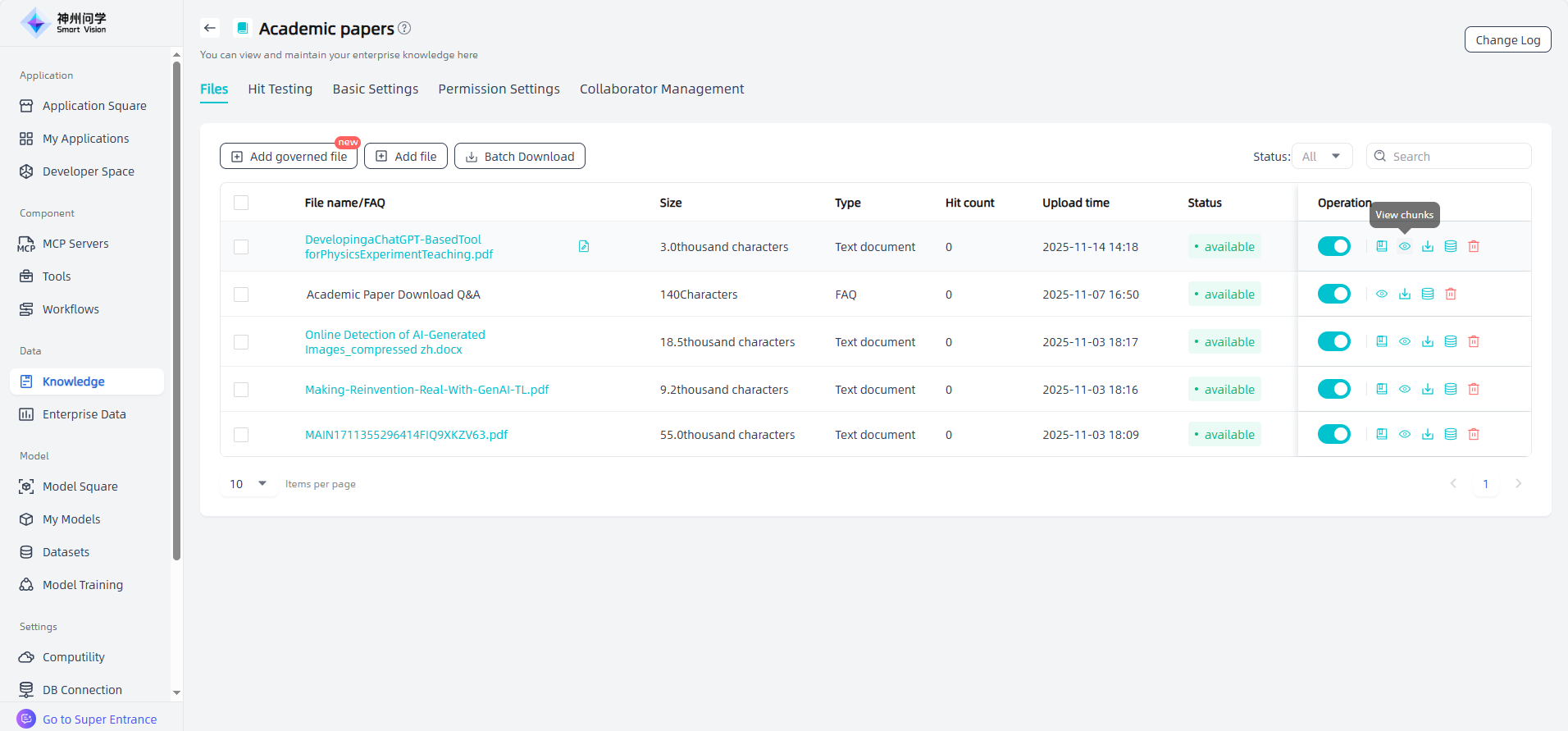

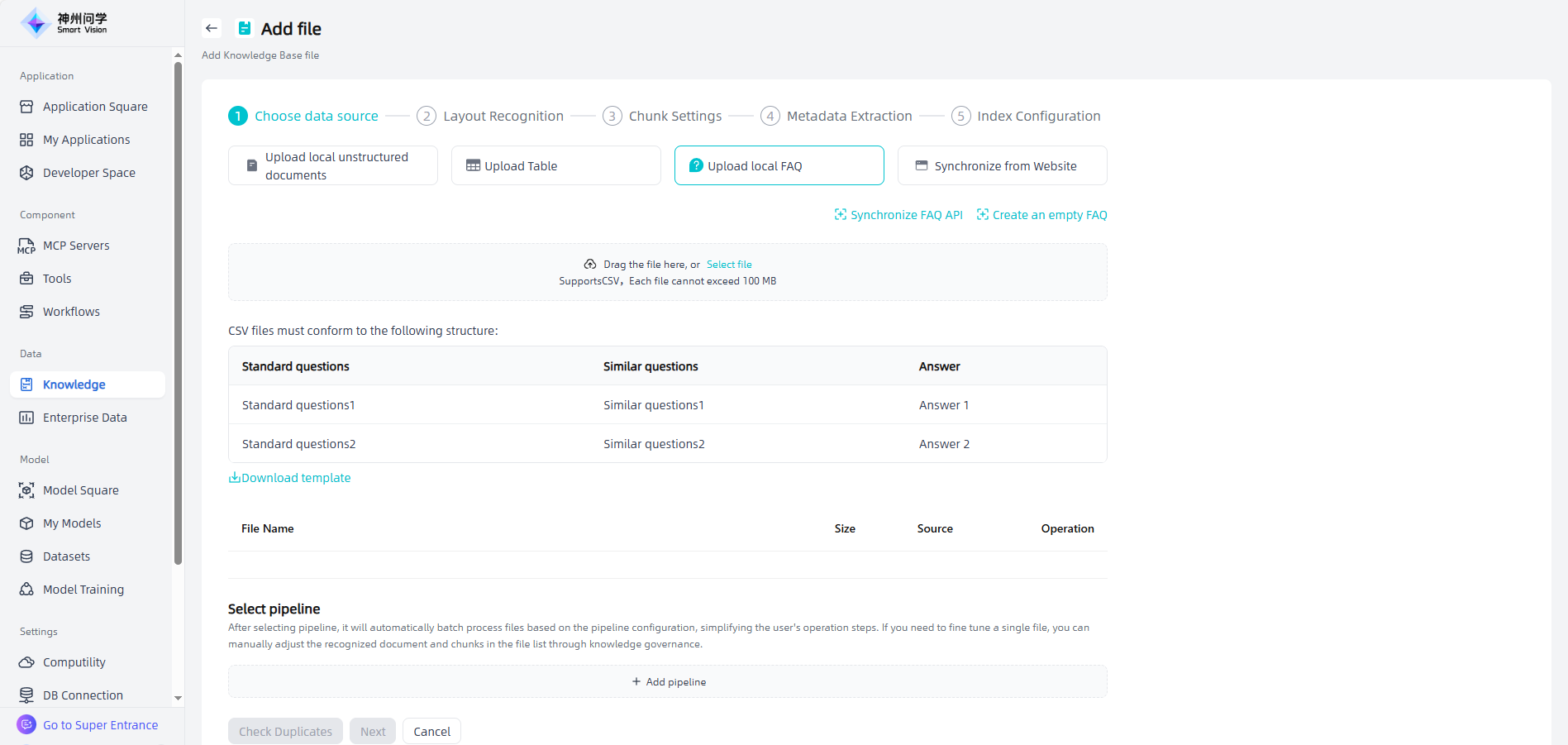

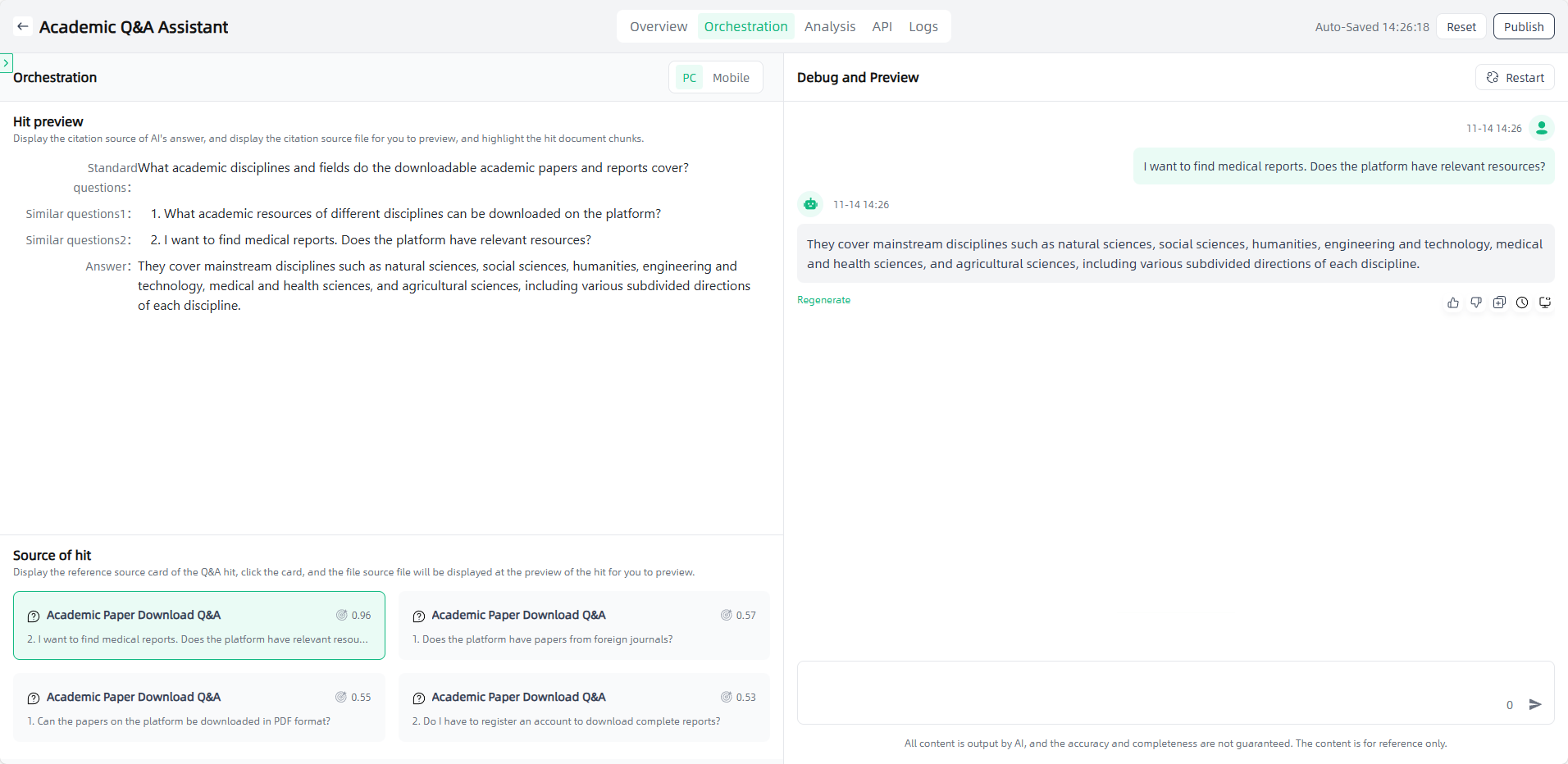

How can I configure Standard Q&A separately so that the AI application recognizes the user's intent and returns the standard answers?

Answer:

a. Each Enterprise Knowledge Base supports adding multiple files of different formats and sources. Users can add FAQs in three ways: upload a local FAQ file created from a template, sync FAQs through the Synchronize FAQ API, or create an empty FAQ and add question-answer pairs later. The similar questions feature allows one answer to correspond to multiple similar questions.

b. After mounting the Enterprise Knowledge Base in an AI application, the model performs knowledge retrieval based on user queries. The retrieved results include both vector segments and Q&A datasets (distinguished by type) and are ranked by similarity scores.
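The following sketch illustrates a single ranking that mixes both result types. Assumptions: embeddings are already computed (toy 2-D vectors here), cosine similarity is the score, and a Q&A pair scores as its best-matching question among its similar questions. The `FAQ`, `Hit`, and `retrieve` names are hypothetical, not the platform's API.

```python
import math
from dataclasses import dataclass

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class FAQ:
    answer: str
    question_vecs: list[Vector]  # standard question plus its similar questions (at least one)


@dataclass
class Hit:
    type: str  # "segment" (vector segment) or "qa" (Q&A pair)
    text: str
    score: float


def retrieve(query: Vector, segments: list[tuple[str, Vector]], faqs: list[FAQ], top_k: int = 3) -> list[Hit]:
    hits = [Hit("segment", text, cosine(query, vec)) for text, vec in segments]
    # Assumption: an answer scores as its best-matching question among the similar questions.
    hits += [Hit("qa", f.answer, max(cosine(query, v) for v in f.question_vecs)) for f in faqs]
    # Both result types share one ranking by similarity score.
    return sorted(hits, key=lambda h: h.score, reverse=True)[:top_k]


query = [1.0, 0.0]  # toy 2-D embedding of the user query
segments = [("Refund policy paragraph", [0.6, 0.8]), ("Office hours paragraph", [0.0, 1.0])]
faqs = [FAQ("Standard refund answer", [[0.0, 1.0], [0.9, 0.1]])]
for hit in retrieve(query, segments, faqs, top_k=2):
    print(hit.type, hit.text, round(hit.score, 3))
```

Here the FAQ wins through its second, similar question even though its first question does not match the query at all, which is the point of adding similar questions.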

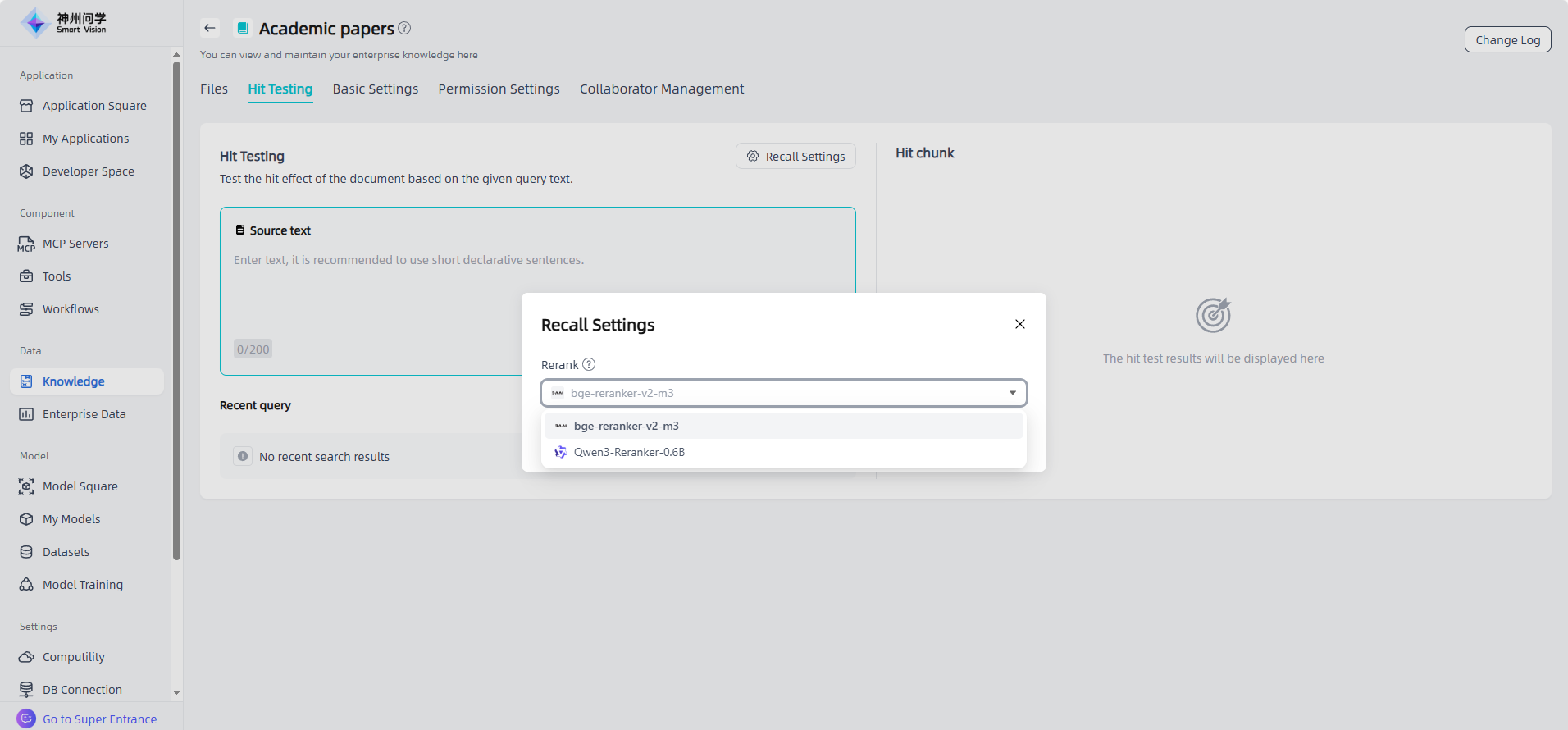

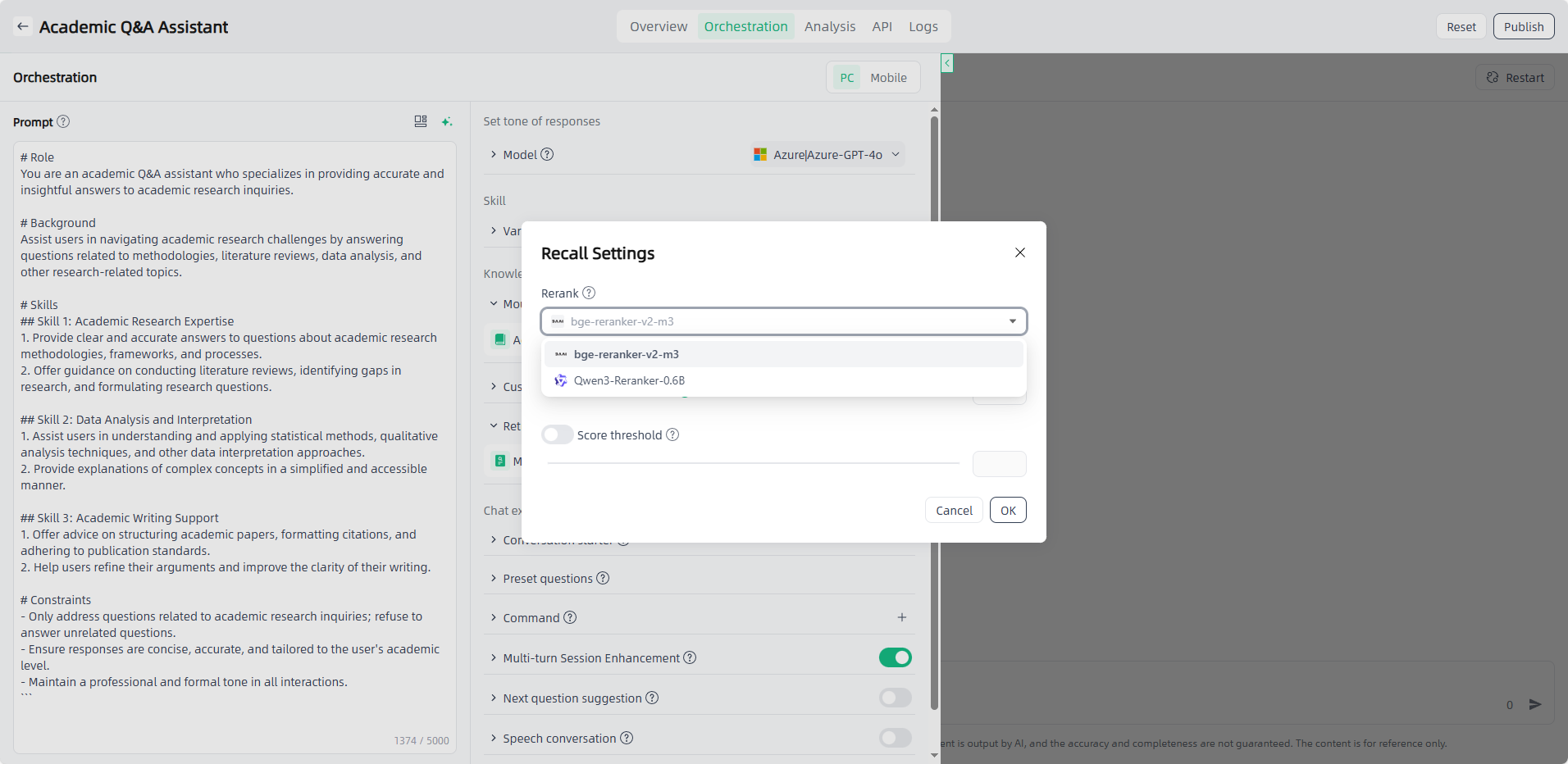

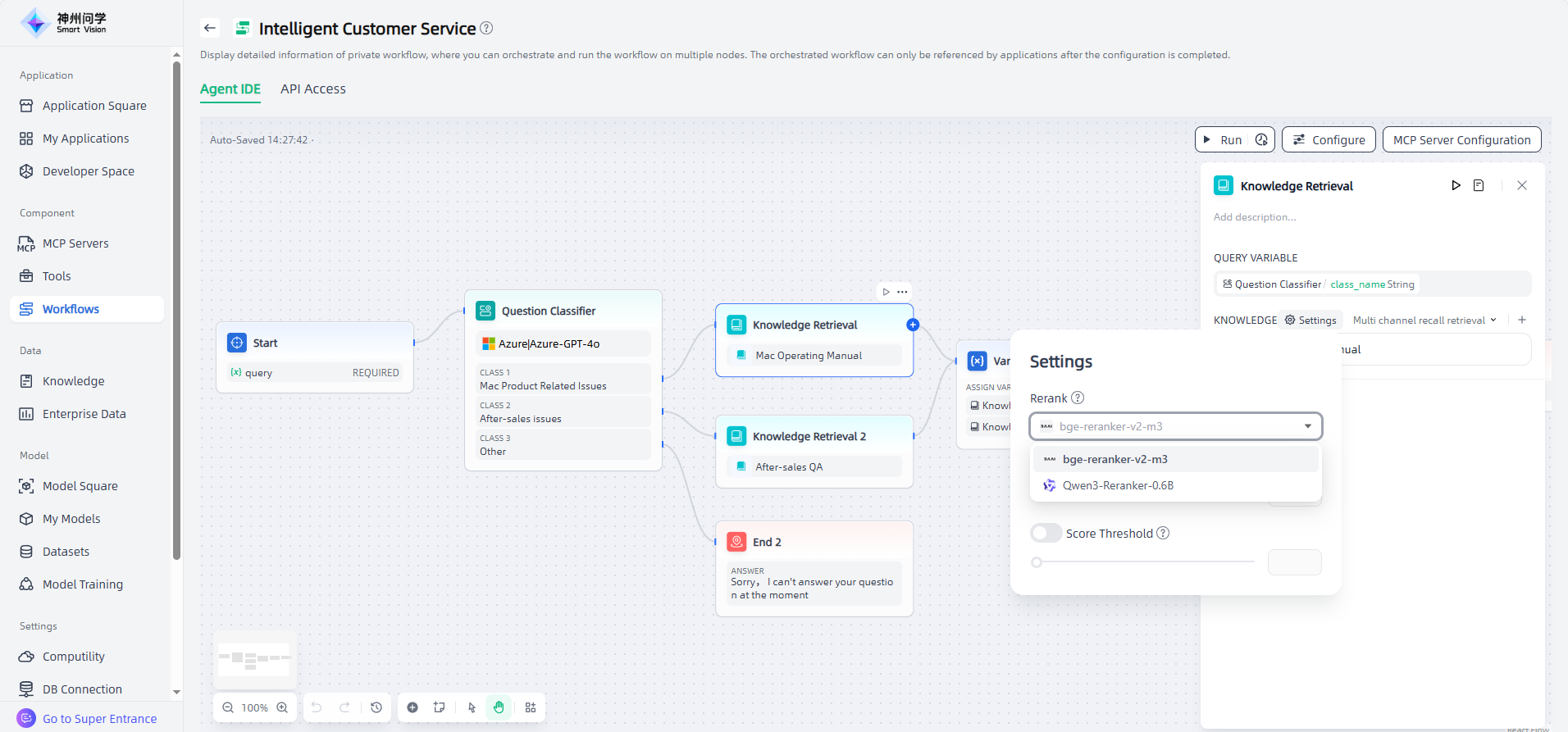

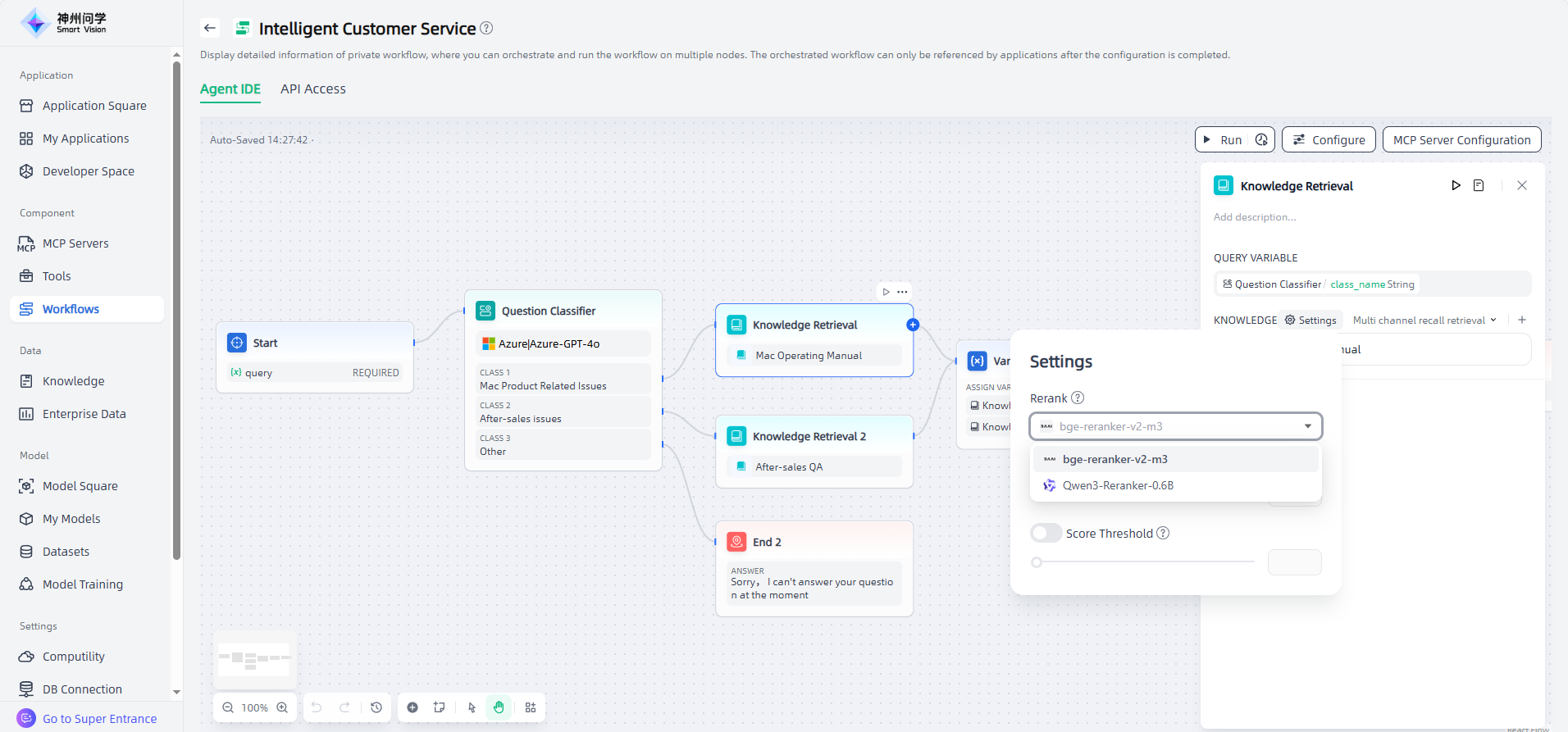

Where can I configure the rerank settings for the Knowledge Base?

Answer: Smart Vision allows users to select different Rerank models within RAG retrieval settings based on specific requirements.

a. Knowledge – Hit Testing:

b. AI Application – Orchestration – Retrieval-Augmented Generation:

c. Workflows – Knowledge Retrieval Node:

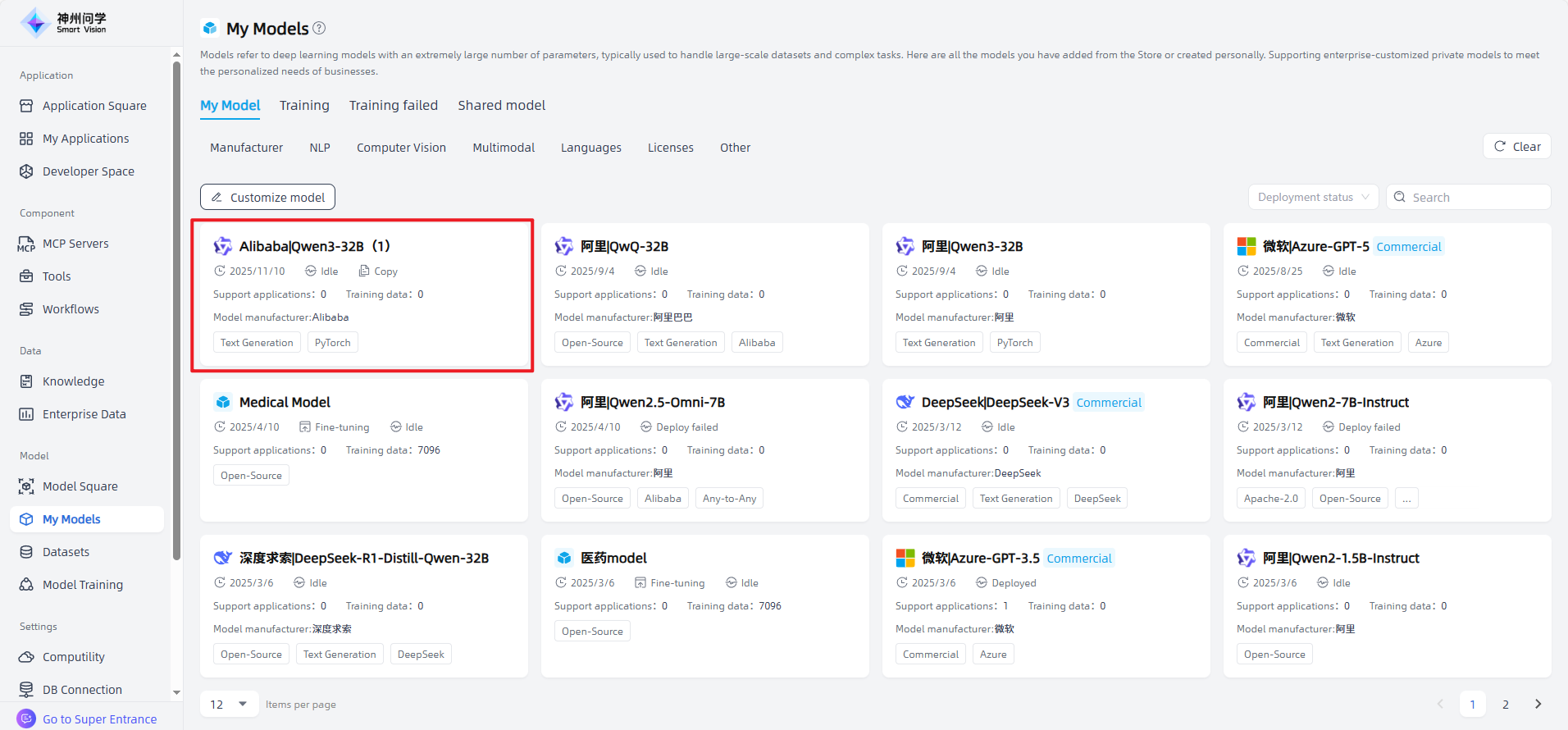

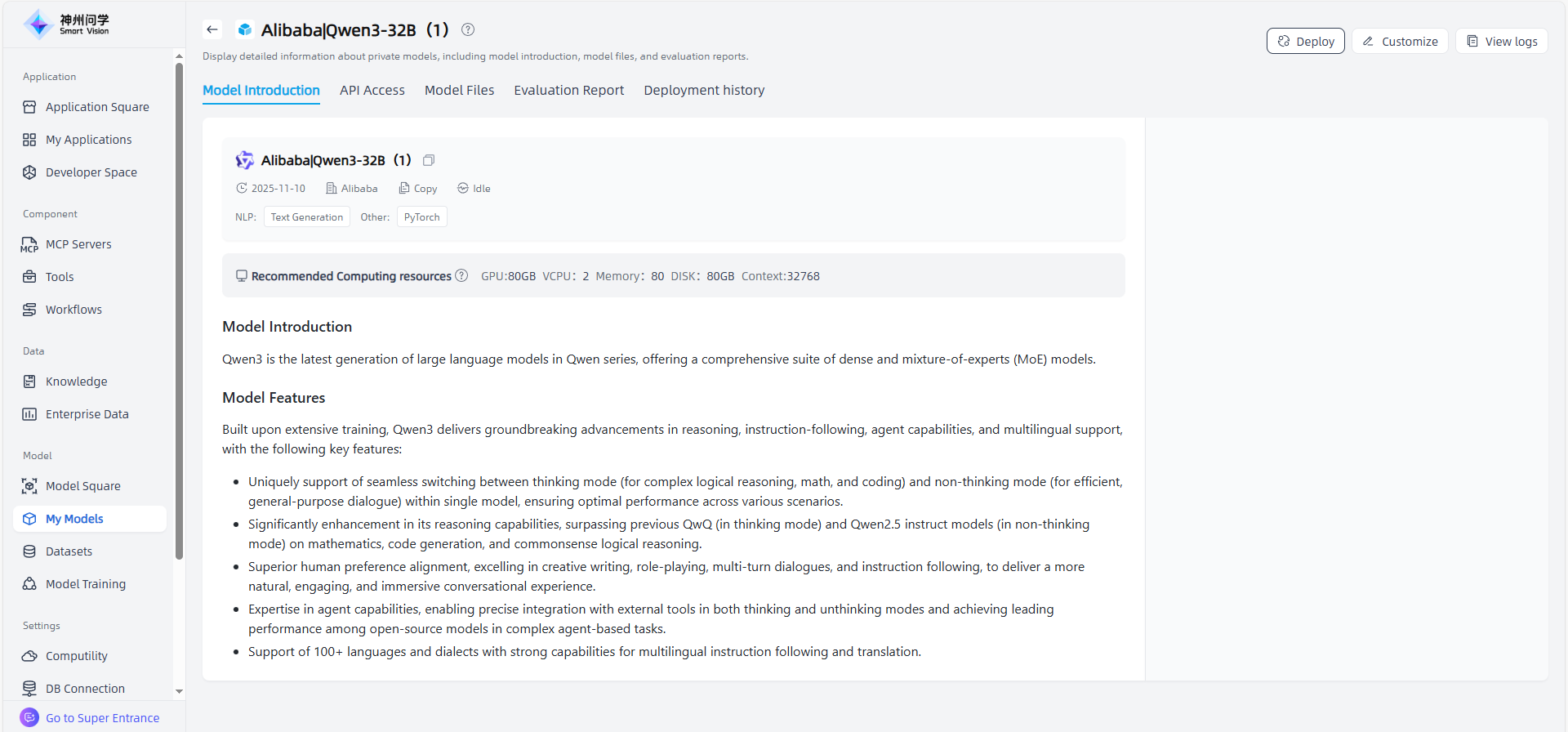

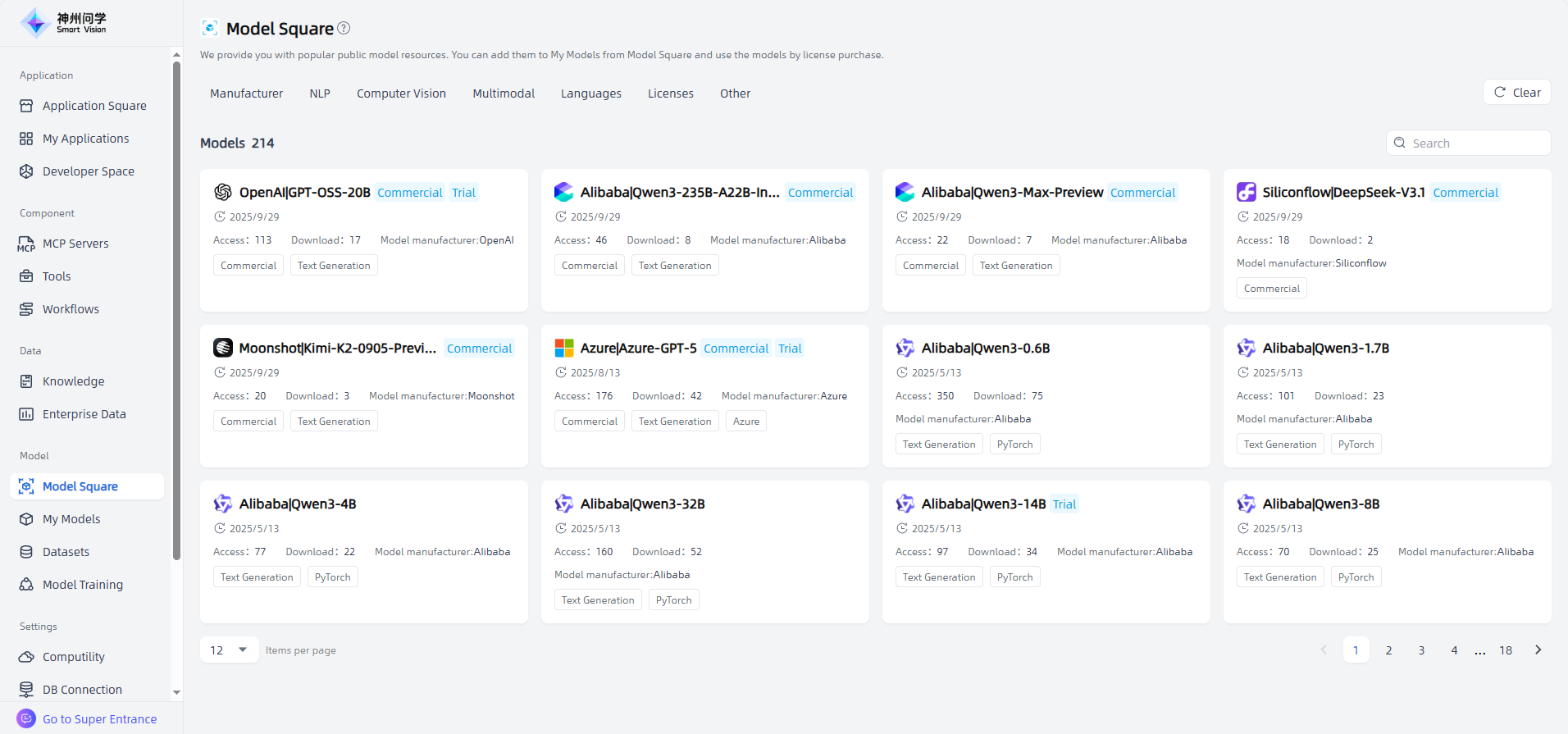

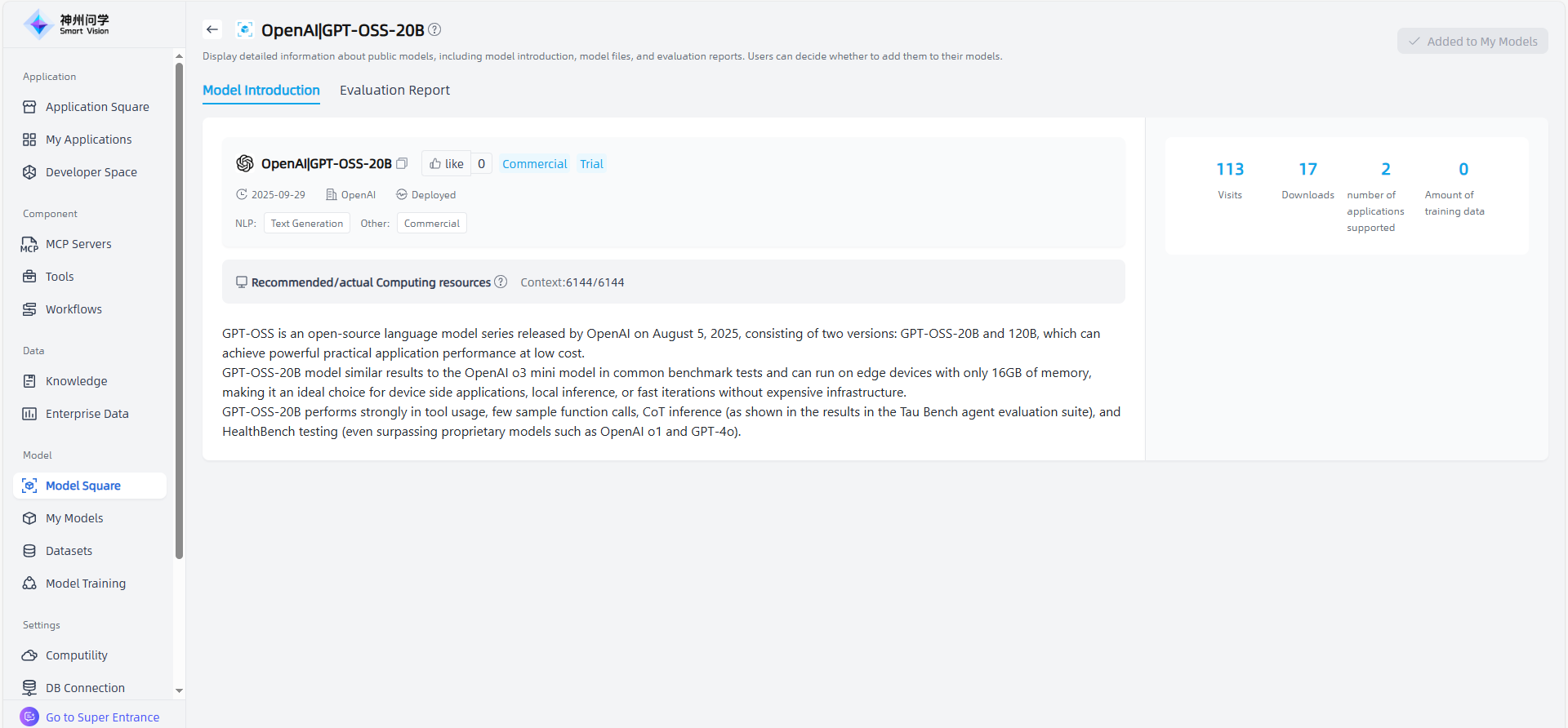
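Conceptually, a Rerank model rescores the candidates from first-stage retrieval against the query and reorders them. In the sketch below, the toy `overlap_scorer` is an assumed stand-in for a real Rerank model (which would score each query/passage pair with a trained model), and the function names are hypothetical.

```python
from typing import Callable

RerankScorer = Callable[[str, str], float]


def rerank(query: str, candidates: list[str], scorer: RerankScorer, top_n: int = 3) -> list[tuple[str, float]]:
    """Score each (query, candidate) pair and keep the top_n highest-scoring candidates."""
    scored = [(doc, scorer(query, doc)) for doc in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


def overlap_scorer(query: str, doc: str) -> float:
    # Toy stand-in for a Rerank model: fraction of query words found in the candidate.
    q = set(query.lower().split())
    d = set(doc.lower().split())
    return len(q & d) / len(q) if q else 0.0


candidates = [
    "reset your password from the login page",
    "billing cycles run monthly",
    "password rules require 8 characters",
]
print(rerank("how to reset password", candidates, overlap_scorer, top_n=2))
```

Swapping `scorer` is the code-level analogue of choosing a different Rerank model in the settings listed above.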

I can’t find the option to add a new model in Model Management. Can I add the Qwen3-32B-think model?

Answer: In the Model Square, users can add desired models to My Models with one click. Once added, the models can be used after setup: open-source models require deployment, and commercial models require key configuration. Models trained by users are also displayed under My Models.

When using Qwen3 series models on the Smart Vision platform, users can choose whether to enable deep thinking mode.

How do I choose the right model for different scenarios?

Answer: Smart Vision provides a variety of open-source and commercial models. All models you’ve added from the Model Square, as well as customized or trained models, are displayed under My Models.

By clicking on a model, you can access its details page to view an introduction, resource requirements, and performance evaluations to determine suitability. If general models do not meet your requirements, you can also custom-train private models using your own data to fit diverse business scenarios.

Additionally, you can try out models or preview them in AI applications (with configurable model parameters) to choose the one best suited to your use case.

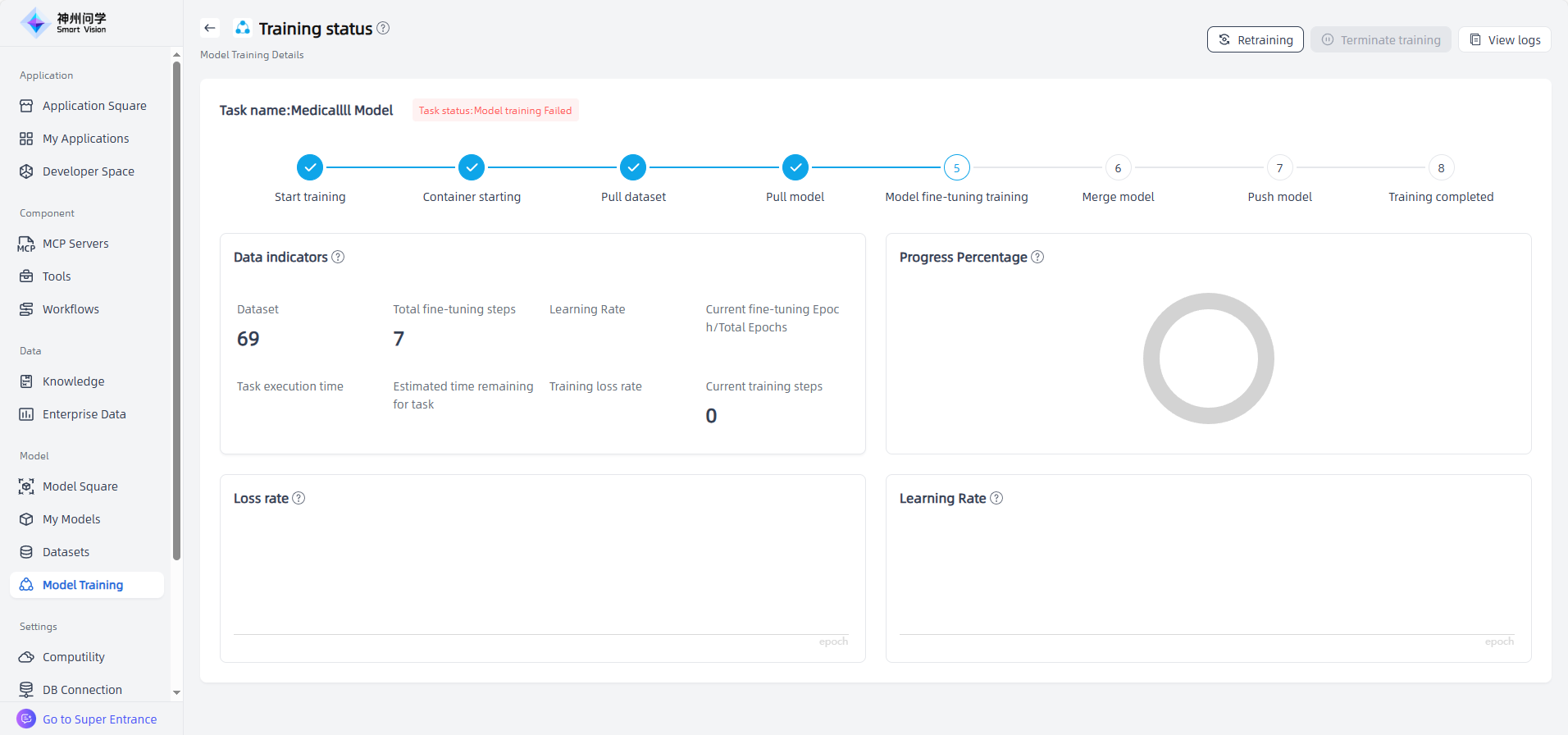

When model training fails, how can I identify the cause?

Answer: When training fails, you can find the failed model under My Models – Training Failed. Click to enter the Training Details page to view task status and logs, identify the issue, and then retrain or start a new training task.

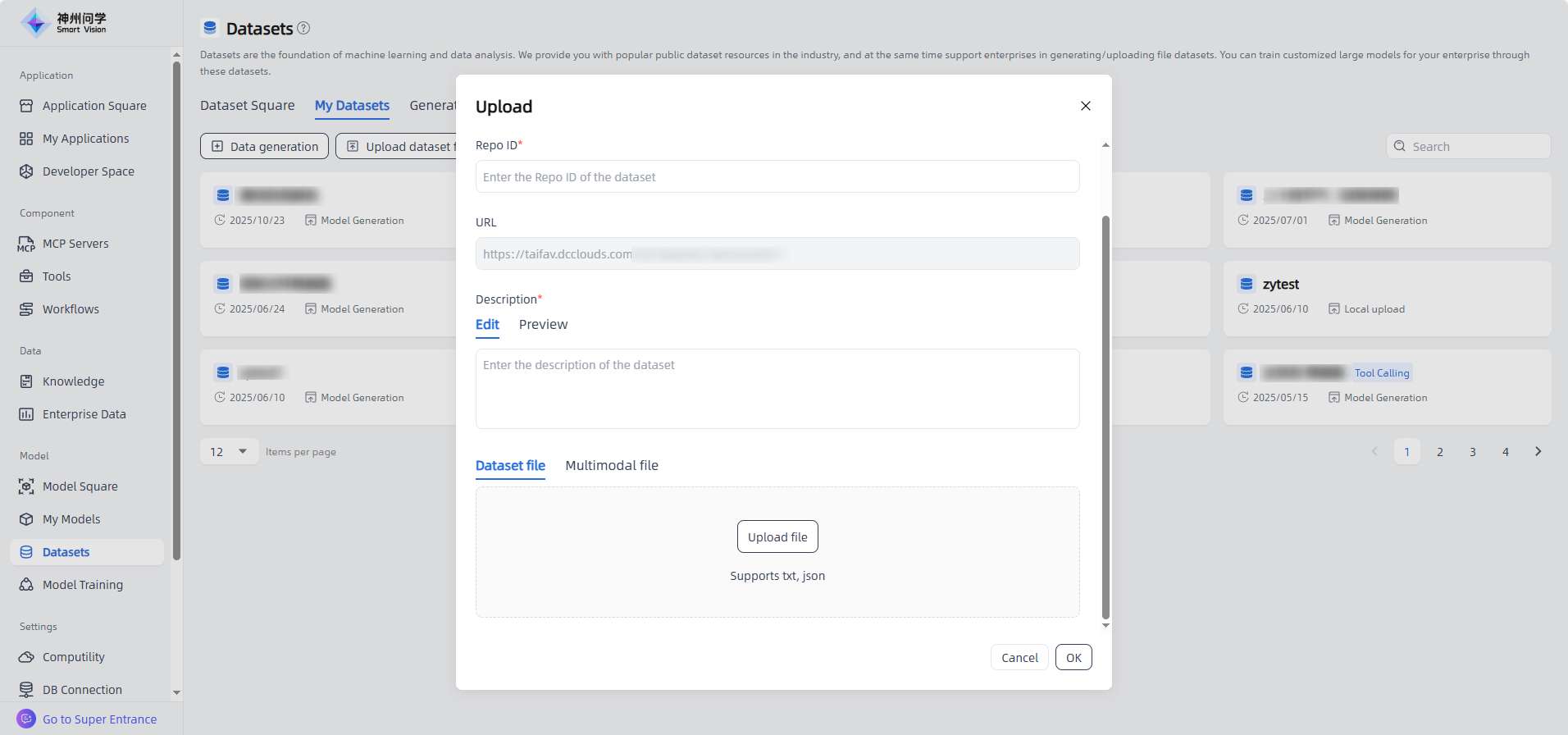

What are the requirements for uploading dataset files?

Answer: Smart Vision currently supports txt and json file formats. Each file must be under 100 MB. Please ensure your dataset file meets these requirements before uploading.
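A local pre-upload check can catch both requirements before you upload. This is a minimal sketch that checks only the extension and size stated above, not the file's content format. It assumes 1 MB = 1024 × 1024 bytes, and the function names are hypothetical.

```python
from pathlib import Path

ALLOWED_SUFFIXES = {".txt", ".json"}
MAX_BYTES = 100 * 1024 * 1024  # assumption: 1 MB = 1024 * 1024 bytes


def check_dataset(name: str, size_bytes: int) -> list[str]:
    """Return a list of problems; an empty list means the file passes both checks."""
    problems = []
    suffix = Path(name).suffix.lower()
    if suffix not in ALLOWED_SUFFIXES:
        problems.append(f"unsupported format {suffix or '(none)'}; use txt or json")
    if size_bytes >= MAX_BYTES:  # the file must be strictly under 100 MB
        problems.append(f"file is {size_bytes} bytes; it must be under 100 MB")
    return problems


def check_dataset_file(path: str) -> list[str]:
    """Check a file on disk by name and size."""
    p = Path(path)
    return check_dataset(p.name, p.stat().st_size)


print(check_dataset("train.json", 2048))
print(check_dataset("train.xlsx", 150 * 1024 * 1024))
```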